PhD Dissertation

Continuous-Time Modeling Using

Lévy-Driven Moving Averages

Representations, Limit Theorems and Other Properties

Mikkel Slot Nielsen

Department of Mathematics

Aarhus University

2019

Continuous-time modeling using Lévy-driven moving averages

Representations, limit theorems and other properties

PhD dissertation by

Mikkel Slot Nielsen

Department of Mathematics, Aarhus University

Ny Munkegade 118, 8000 Aarhus C, Denmark

Supervised by

Associate Professor Andreas Basse-O’Connor

Associate Professor Jan Pedersen

Submitted to Graduate School of Science and Technology, Aarhus, July 3, 2019

Dissertation was typesat in kpfonts with

pdfL

A

T

E

X and the memoir class

DEPARTMENT OF MATHEMATICS

AARHUS

UNIVERSITY

AU

Contents

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . v

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Resumé . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Introduction 1

1 A Wold–Karhunen type decomposition and the Lévy-driven

moving averages . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

2 Dynamic models for Lévy-driven moving averages . . . . . . . 6

3

Limit theorems for quadratic forms and related quantities of

Lévy-driven moving averages . . . . . . . . . . . . . . . . . . . 16

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Paper A Equivalent martingale measures for Lévy-driven moving

averages and related processes 23

by Andreas Basse-O’Connor, Mikkel Slot Nielsen and Jan Pedersen

1 Introduction and a main result . . . . . . . . . . . . . . . . . . . 23

2 Further main results . . . . . . . . . . . . . . . . . . . . . . . . . 26

3 Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

4 Proofs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Paper B Stochastic delay differential equations and related

autoregressive models 45

by Andreas Basse-O’Connor, Mikkel Slot Nielsen, Jan Pedersen

and Victor Rohde

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

2 The SDDE setup . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3 The level model . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4 Proofs and technical results . . . . . . . . . . . . . . . . . . . . 58

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Paper C Recovering the background noise of a Lévy-driven CARMA

process using an SDDE approach 69

by Mikkel Slot Nielsen and Victor Rohde

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

2 CARMA processes and their dynamic SDDE representation . . 70

i

3 Estimation of the SDDE parameters . . . . . . . . . . . . . . . . 74

4 A simulation study, p = 2 . . . . . . . . . . . . . . . . . . . . . . 75

5 Conclusion and further research . . . . . . . . . . . . . . . . . . 77

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Paper D Multivariate stochastic delay differential equations and CAR

representations of CARMA processes 81

by Andreas Basse-O’Connor, Mikkel Slot Nielsen, Jan Pedersen and

Victor Rohde

1 Introduction and main ideas . . . . . . . . . . . . . . . . . . . . 81

2 Notation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

3 Stochastic delay differential equations . . . . . . . . . . . . . . 85

4 Examples and further results . . . . . . . . . . . . . . . . . . . . 86

5 Proofs and auxiliary results . . . . . . . . . . . . . . . . . . . . 93

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Paper E Stochastic differential equations with a fractionally filtered delay:

a semimartingale model for long-range dependent processes 107

by Richard A. Davis, Mikkel Slot Nielsen and Victor Rohde

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

2 Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

3 The stochastic fractional delay differential equation . . . . . . . 112

4 Delays of exponential type . . . . . . . . . . . . . . . . . . . . . 116

5 Simulation from the SFDDE . . . . . . . . . . . . . . . . . . . . 120

6 Proofs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

7 Supplement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Paper F Limit theorems for quadratic forms and related quantities of

discretely sampled continuous-time moving averages 137

by Mikkel Slot Nielsen and Jan Pedersen

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

2 Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

3 Further results and examples . . . . . . . . . . . . . . . . . . . 142

4 Proofs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Paper G On non-stationary solutions to MSDDEs: representations and

the cointegration space 159

by Mikkel Slot Nielsen

1 Introduction and main results . . . . . . . . . . . . . . . . . . . 159

2 Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

3

General results on existence, uniqueness and representations

of solutions to MSDDEs . . . . . . . . . . . . . . . . . . . . . . . 163

ii

4 Cointegrated multivariate CARMA processes . . . . . . . . . . 168

5 Proofs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Paper H Low frequency estimation of Lévy-driven moving averages 181

by Mikkel Slot Nielsen

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

2 Estimators of interest and asymptotic results . . . . . . . . . . 183

3 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

Paper I A statistical view on a surrogate model for estimating extreme

events with an application to wind turbines 193

by Mikkel Slot Nielsen and Victor Rohde

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

2 The model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

3 Application to extreme event estimation for wind turbines . . . 198

4 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

iii

Preface

This dissertation is the result of my PhD studies carried out from May 1, 2015 to

July 3, 2019 at Department of Mathematics, Aarhus University, under supervision

of Andreas Basse-O’Connor (main supervisor) and Jan Pedersen (co-supervisor). My

studies were fully funded by Andreas’ grant (DFF–4002–00003) from the Danish

Council for Independent Research.

The dissertation consists of the following nine self-contained papers:

Paper A

Equivalent martingale measures for Lévy-driven moving averages and

related processes. Stochastic Processes and their Applications 128(8), 2538–

2556.

Paper B

Stochastic delay differential equations and related autoregressive models.

Stochastics (forthcoming), 24 pages.

Paper C

Recovering the background noise of a Lévy-driven CARMA process using

an SDDE approach. Proceedings ITISE 2017 2, 707–718.

Paper D

Multivariate stochastic delay differential equations and CAR representa-

tions of CARMA processes. Stochastic Processes and their Applications (forth-

coming), 25 pages.

Paper E

Stochastic differential equations with a fractionally filtered delay: a semi-

martingale model for long-range dependent processes. Bernoulli (forthcom-

ing), 30 pages.

Paper F

Limit theorems for quadratic forms and related quantities of discretely

sampled continuous-time moving averages. ESAIM: Probability and Statistics

(forthcoming), 20 pages.

Paper G

On non-stationary solutions to MSDDEs: representations and the cointe-

gration space. Submitted.

Paper H Low frequency estimation of Lévy-driven moving averages. Submitted.

Paper I

A statistical view on a surrogate model for estimating extreme events with

an application to wind turbines. In preparation.

Up to notation and minor adjustments, Papers A–H align with their published or

submitted version. Main parts of Papers A–C are written during the first two years of

my PhD studies, and thus these were also included in my progress report used for

the qualifying examination, after which I obtained a master’s degree in mathematical

economics. While a few of the ideas of Papers D and F were briefly discussed in the

v

Preface

progress report as well, Papers D–I are primarily a result of the last two years of

my studies. I have contributed comprehensively in both the writing as well as the

research phase of Papers A–B and D–H. Together with Victor Rohde I have written

Papers C and I, and to these we have contributed equally.

The first chapter of the dissertation is an introduction, which motivates the use of

Lévy-driven moving averages in the modeling of continuous-time stochastic systems

and discusses the importance of obtaining knowledge of their representations, limit

theorems and certain other properties. The findings of Papers A–H deliver answers

to many of the questions raised in this discussion, and hence the main results of

these papers will also be highlighted in this chapter. Paper I, however, is an industrial

collaboration with Vestas Wind Systems A/S and concerns estimation of extreme

loads on wind turbines using covariates. Since the details are carefully explained in

the included paper and the overall aim differs from that of Papers A–H, I have chosen

not to address its findings in the introductory chapter.

My four years of PhD studies have been both challenging and rewarding, and I

owe several people huge thanks for making the journey joyful. First of all, I thank my

main supervisor Andreas Basse-O’Connor for giving me the unique opportunity of

pursuing a PhD degree in a truly inspiring and intellectually stimulating research

environment and for our many fruitful discussions. His support, enthusiasm and

high ambitions have definitely pushed my limits as a researcher. A special thanks

goes to my co-supervisor Jan Pedersen, with whom I have had uncountably many

conversations spanning from technical details in proofs and general probabilistic

and statistical considerations to an analysis of the outcome of yesterday’s hockey

match. Due to his extraordinary guidance, his trust in my abilities and his positive

mindset, Jan has had a significant impact on my development and well-being during

my studies. I feel honored that Andreas and Jan have invested this much time and

effort in me—it exceeds by far what could be expected of a supervisor, and for this I

am deeply grateful.

I would also like to thank my co-author Richard A. Davis from Department of

Statistics, Columbia University, for letting me visit him and his group in New York and

for his interest in my research. Our frequent meetings and his generous hospitality

ensured that I had a constructive and pleasant stay. I thank as well my office mate

Victor Rohde for numerous fruitful collaborations, and the local L

A

T

E

X expert Lars

‘daleif’ Madsen and my office mate Mathias Ljungdahl for helping me with the

technical typesetting. Furthermore, I want to thank my colleagues at Department

of Mathematics, Aarhus University, for giving me a perfect working environment,

which I have enjoyed being a part of throughout my studies. A particular thanks goes

to Claudio Heinrich, Julie Thøgersen, Mads Stehr, Mathias Ljungdahl, Patrick Laub,

Thorbjørn Grønbæk and Victor Rohde for all the (non-)mathematical discussions and

social activities.

Finally, my family and friends deserve an abundance of gratitude for their endless

support and encouragement. I conclude with a very special thanks to my fiancée

Marianne, since none of this would have been possible without her.

Mikkel Slot Nielsen

Aarhus, July 2019

vi

Summary

Similarly to the discrete-time framework, moving averages driven by white noise

processes play a crucial role in the modeling of continuous-time stochastic processes.

The main purpose of this dissertation is to address various aspects of Lévy-driven

moving averages. The existence of equivalent martingale measures, autoregressive

representations and limit theorems will be of particular interest.

Based on earlier literature on the semimartingale property for Lévy-driven moving

averages, and under rather general conditions on the Lévy process, we give necessary

and sufficient conditions on the driving kernel for an equivalent martingale measure

to exist. In particular, these conditions extend previous results for Gaussian moving

averages to the symmetric α-stable case with an arbitrary α ∈ (1, 2].

A significant part of the dissertation concerns various properties of solutions to

a range of stochastic delay differential equations (SDDEs). Among other things, we

obtain sufficient conditions for existence and uniqueness of solutions to univariate,

multivariate, higher order and fractional SDDEs, provide moving average represen-

tations of the solutions and discuss its memory properties. A few implications of

the obtained results are that (i) invertible continuous-time ARMA processes can

be viewed as unique solutions to SDDEs, (ii) solutions can be semimartingales and

exhibit long memory at the same time, and (iii) cointegration can be embedded in

multivariate SDDEs in a straightforward manner. From the properties that we prove

for SDDEs we draw several parallels to classical results for autoregressive representa-

tions in the discrete-time literature and, hence, indicate that it may be reasonable to

think of SDDEs as the continuous-time counterpart.

We also study the limiting behavior of quadratic forms and related quantities of

discretely sampled Lévy-driven moving averages. The linear nature of Lévy-driven

moving averages and their tractable probabilistic structure allow us to obtain rather

explicit conditions on the driving kernel and the coefficients of the quadratic form

ensuring asymptotic normality. The result differs from those obtained in related

literature due to the quite delicate interplay between discrete-time sampling and

continuous-time convolutions. The applications of these asymptotic results are many;

in particular, we demonstrate how they can be used to obtain central limit theorems

when estimating the driving kernel parametrically using least squares.

The last part of the dissertation is related to an industrial collaboration, where

we consider prediction of extreme loads on wind turbines using only a number of

covariates and a simulation tool. In particular, we discuss how to set up a statistical

model in this situation, address some of the key assumptions and, finally, check its

performance on real-world data.

vii

Resumé

Præcis som for modeller i diskret tid spiller glidende gennemsnit drevet af hvid støj

en fundamental rolle i modelleringen af stokastiske processer i kontinuert tid. Hoved-

formålet med denne afhandling er at undersøge forskellige aspekter af Lévy-drevne

glidende gennemsnit. Vi vil være særligt interesserede i eksistens af ækvivalente

martingalmål, autoregressive repræsentationer og grænseværdisætninger.

Baseret på tidligere litteratur om semimartingal-egenskaben for Lévy-drevne

glidende gennemsnit, og under ret svage antagelser på Lévy-processen, giver vi nød-

vendige og tilstrækkelige betingelser på den drivende kerne, der sikrer, at et ækvi-

valent martingalmål eksisterer. Som et specialtilfælde af dette resultat opnår vi en

generalisering af resultater for gaussiske glidende gennemsnit til det symmetrisk

α-stabile tilfælde for et vilkårligt α ∈ (1,2].

En stor del af afhandlingen omhandler forskellige egenskaber ved løsninger til en

række stokastiske differentialligninger, som involverer processens egen fortid (disse

vil herfra kort refereres til som SDDEer). Vi giver tilstrækkelige betingelser til at

sikre eksistens og entydighed af løsninger til en- og flerdimensionale SDDEer, SD-

DEer af højere orden og fraktionelle SDDEer. Desuden repræsenterer vi løsningerne

som glidende gennemsnit samt studerer deres afhængighedsstruktur. Umiddelbare

konsekvenser af disse resultater er, at (i) invertible ARMA processer i kontinuert tid

er entydige løsninger til SDDEer, (ii) løsninger kan være semimartingaler og have

lang hukommelse på samme tid, og (iii) kointegration kan nemt indlejres i de fler-

dimensionale SDDEer. Fra de beviste egenskaber for SDDEer trækker vi adskillige

paralleller til klassiske resultater for autoregressive modeller i diskret tid og indikerer

på den måde, at SDDEer kan opfattes som modstykket i kontinuert tid.

Vi studerer også den asymptotiske opførsel af kvadratiske former og relaterede

størrelser af diskrete observationer fra Lévy-drevne glidende gennemsnit. De gliden-

de gennemsnits lineære struktur samt transparente fordelingsmæssige egenskaber

gør det muligt for os at udlede eksplicitte betingelser på den drivende kerne og

koefficienterne i den kvadratiske form, som sikrer asymptotisk normalitet. På grund

af det udfordrende samspil mellem diskrete observationer og foldninger i kontinuert

tid adskiller resultatet sig fra dem, der er udledt i lignende litteratur. Sådanne asymp-

totiske resultater har mange anvendelser: f.eks. viser vi, hvordan de kan bruges til at

udlede centrale grænseværdisætninger ifm. parametrisk estimation af den drivende

kerne ved brug af mindste kvadraters metode.

Afhandlingens sidste del er relateret til et industrielt samarbejde, hvor vi studerer

estimation af ekstreme belastninger på vindmøller ved brug af en række kovariater

samt et simuleringsværktøj. Her diskuterer vi, hvordan man kan formulere en fornuf-

tig statistisk model samt belyser de væsentligste antagelser. Endeligt undersøger vi,

hvordan modellen klarer sig på data fra virkeligheden.

ix

Introduction

This chapter motivates the study of Lévy-driven moving average processes, highlights

key results obtained in the included papers and addresses their relation to existing

literature. In Section 1 we discuss why Lévy-driven moving averages constitute a

convenient class for modeling a wide range of stochastic systems in time by relying

on a Wold–Karhunen type decomposition, and we review some of their properties.

This leads naturally to a discussion of the key findings of Paper A. Section 2 concerns

the specification of the deterministic kernel driving the moving average. Specifically,

by drawing parallels to the discrete-time literature on ARMA type equations, we mo-

tivate the continuous-time ARMA processes as well as solutions to certain stochastic

delay differential equations. These are all special cases of moving averages, which

have formed the foundations of Papers B–E and G, and hence we end the section

by giving an overview of the main contributions of each of these papers. Finally, in

Section 3 we discuss the relevance of limit theorems for quadratic forms and related

quantities of Lévy-driven moving averages and relate it to Papers F and H.

1 A Wold–Karhunen type decomposition and the Lévy-driven

moving averages

There may be many reasons for modeling stochastic processes continuously in time.

To give an example, financial data are nowadays sampled at both very high and

irregular frequencies, and the continuous-time specification is a way to model this

type of observations in a consistent manner. Another reason is due to the remarkable

result of Delbaen and Schachermayer [18], which essentially characterizes arbitrage

opportunities in a financial market driven by semimartingales in terms of the exis-

tence of a so-called equivalent martingale measure (cf. Paper A). For further examples

on the use of continuous-time models, see [1, 8] and [22, Section 1.2].

Suppose now that (

X

t

)

t∈R

is a centered and weakly stationary (continuous-time)

process, that is,

E[X

2

t

] < ∞, E[X

t

] = 0 and

h 7−→E[X

t+h

X

t

]

=

h 7−→E[X

h

X

0

]

C γ

X

(1.1)

for all

t ∈ R

. While some phenomena may be reasonably described by such (

X

t

)

t∈R

,

one may often need to transform, deseasonalize and/or detrend observations to

align with such assumptions (see [11, Section 1.4] for details on this). A classical

example is the evolution of a stock price (

S

t

)

t∈R

exhibiting the random walk behavior

lim

t→∞

Var

(

S

t

) =

∞

, while its

∆

-period log-returns

X

t

=

logS

t+∆

−logS

t

,

t ∈ R

, might

approximately meet

(1.1)

. A related example is the (log-)prices of two stocks (

S

1

t

)

t∈R

and (

S

2

t

)

t∈R

, which individually may wander widely, but the spread

X

t

=

S

1

t

−S

2

t

,

t ∈ R

,

1

Introduction

behaves in a stationary manner. Such a situation can happen if the two stocks are

very similar by nature and one would in this case refer to them as being cointegrated

(see also Paper G). Despite the fact that the class of processes satisfying

(1.1)

is large

and general, Theorem 1.1 shows that these conditions are not far from ensuring that,

up to a term that can be perfectly predicted from the remote past (in an

L

2

(

P

) sense),

they correspond to moving averages driven by white noise processes. In the result it

will be required that (

X

t

)

t∈R

is continuous in

L

2

(

P

) or, equivalently,

γ

X

is continuous

at 0. Under this assumption it follows by Bochner’s theorem that there exists a finite

and symmetric Borel measure

F

X

, usually referred to as the spectral distribution of

(X

t

)

t∈R

, which has characteristic function γ

X

:

γ

X

(h) =

Z

R

e

ihy

F

X

(dy), h ∈ R. (1.2)

In the formulation,

f

X

refers to the density of the absolutely continuous part of

F

X

and sp denotes the L

2

(P) closure of the linear span.

Theorem 1.1 (Karhunen [28]).

Suppose that (

X

t

)

t∈R

is a centered and weakly station-

ary process, which is continuous in

L

2

(

P

). Moreover, suppose that the Paley–Wiener

condition

Z

R

|logf

X

(y)|

1 + y

2

dy < ∞ (1.3)

is satisfied. Then there exists a unique decomposition of (X

t

)

t∈R

as

X

t

=

Z

t

−∞

g(t −u) dZ

u

+ V

t

, t ∈ R, (1.4)

where

g : R → R

belongs to

L

2

, (

Z

t

)

t∈R

is a process with weakly stationary and orthogonal

increments satisfying

E

[(

Z

t

−Z

s

)

2

] =

t −s

for all

s < t

, and (

V

t

)

t∈R

is a weakly stationary

process with

V

t

∈

T

s∈R

sp{X

u

:

u ≤ s}

for

t ∈ R

. Moreover, if

F

X

is absolutely continuous

with a density f

X

satisfying (1.3) then V

t

= 0 for all t ∈ R.

The stochastic integral in

(1.4)

is defined as an

L

2

(

P

) limit of integrals of simple func-

tions. While the proof of Theorem 1.1 can be found in [28, Satz 5–6], the formulation

of the result is borrowed from [5, Theorem 4.1]. It is straightforward to verify that a

converse of Theorem 1.1 is also true: if

g ∈ L

2

and (

Z

t

)

t∈R

is a process with weakly

stationary and orthogonal increments satisfying E[(Z

t

−Z

s

)

2

] = t −s for s < t, then

X

t

=

Z

t

−∞

g(t −u) dZ

u

, t ∈ R, (1.5)

satisfies

(1.1)

, and

γ

X

can be represented as

(1.2)

with

F

X

(

dy

) = (2

π

)

−1

|F

[

g

](

y

)

|

2

dy

.

Here

F

[

g

] denotes the Fourier transform of

g

; we define it as

F

[

g

](

y

) =

R

R

e

−ity

g

(

t

)

dt

for g ∈ L

1

, y ∈ R, and extend it to functions in L

1

∪L

2

by Plancherel’s theorem.

Loosely speaking, the above considerations show that weakly stationary processes

correspond to causal moving averages of the form

(1.5)

and, thus, it would be natural

to focus on modeling

g

and (

Z

t

)

t∈R

. Note that, unless (

X

t

)

t∈R

can be assumed to be

Gaussian, in which case (

Z

t

)

t∈R

is a standard Brownian motion,

(1.5)

does not reveal

anything about (

X

t

)

t∈R

beyond its second order properties. In particular, for a general

noise process (

Z

t

)

t∈R

, the relation

(1.5)

leaves us with no insight about the path

2

1 · A Wold–Karhunen type decomposition and the Lévy-driven moving averages

properties and the probabilistic structure of (

X

t

)

t∈R

. For instance, to assess properties

of estimators based on (

X

t

)

t∈R

, it is necessary to have a better understanding of its

dependence structure. This should indicate that, while the overall moving average

(convolution) structure can possibly produce a wide class of interesting processes,

we should require that (

Z

t

)

t∈R

is a particularly nice process. Natural candidates are

provided by the extensively studied class of Lévy processes ([6, 9, 34]), since these will

allow us to keep track of the entire distribution of the process while maintaining the

same second order properties. Since Lévy processes have stationary and independent

increments, the use of these can be seen as the continuous-time equivalent of using

i.i.d. noise rather than just uncorrelated noise in a discrete-time setting.

Recall that a one-sided Lévy process (

L

t

)

t≥0

,

L

0

= 0, is a stochastic process with

càdlàg sample paths having stationary and independent increments. These properties

imply that logE[exp{iyL

t

}] = tlogE[exp{iyL

1

}] for y ∈ R. Consequently, since

ψ

L

(y) B logE

h

e

iyL

1

i

= iyb −

1

2

c

2

y

2

+

Z

R

(e

iyx

−1 −iyx1

{|x|≤1}

)F(dx), y ∈ R,

for some

b ∈ R

,

c

2

≥

0 and Lévy measure

F

by the Lévy–Khintchine formula, the

distribution of (

L

t

)

t≥0

may be summarized as a triplet (

b, c

2

,F

). We extend (

L

t

)

t≥0

to

a two-sided Lévy process (

L

t

)

t∈R

by setting

L

t

=

−

˜

L

(−t)−

for

t <

0, where (

˜

L

t

)

t≥0

is an

independent copy of (

L

t

)

t≥0

. When

E

[

|L

1

|

]

< ∞

, or equivalently

R

|x|>1

|x|F

(

dx

)

< ∞

, we

let

¯

L

t

= L

t

−tE[L

1

], t ∈ R, denote the centered version of (L

t

)

t∈R

.

For a measurable function

g : R → R

, which vanishes on (

−∞,

0), necessary and

sufficient conditions on (b,c

2

,F, g) for the Lévy-driven moving average

X

t

=

Z

t

−∞

g(t −u) dL

u

, t ∈ R, (1.6)

to exist (as limits in probability of integrals of simple functions) are given in [31,

Theorem 2.7]. It follows as well from [31] that the finite dimensional distributions of

(X

t

)

t∈R

are characterized in terms of (b,c

2

,F, g) by the relation

logE

h

e

i(y

1

X

t

1

+···+y

n

X

t

n

)

i

=

Z

R

ψ

L

(y

1

g(t

1

+ u)+ ···+ y

n

g(t

n

+ u)) du,

which holds for any

n ∈ N

and

t

1

,y

1

,... , t

n

,y

n

∈ R

. One immediate consequence of

this relation is that (

X

t

)

t∈R

is a stationary and infinitely divisible stochastic process

(the finite dimensional distributions of (

X

t+h

)

t∈R

are infinitely divisible and do not

depend on

h

). Note that, in contrast to

(1.5)

, (

X

t

)

t∈R

given by

(1.6)

needs not satisfy

(1.1)

, e.g., it may allow for a heavy-tailed marginal distribution. For instance, if

L

1

has a symmetric

α

-stable distribution for some

α ∈

(0

,

2), then

(1.6)

is well-defined

if and only if

g ∈L

α

, in which case the distribution of

X

0

is also symmetric

α

-stable

([33, Propositions 6.2.1–6.2.2]). In particular, for

p ∈

(0

,∞

) it holds that

E

[

|X

0

|

p

]

< ∞

if and only if

p < α

([33, Property 1.2.16]). While the class of Lévy-driven moving

averages is rather large, it should be pointed out that more general specifications of

stationary infinitely divisible processes, such as mixed moving averages (in particular,

superpositions of Ornstein–Uhlenbeck processes) and Lévy semistationary processes

have also received some attention in the literature; see [3, 4] for details.

The path properties of (

X

t

)

t∈R

are very much related to those of

g

, and for details

beyond the following discussion we refer to [32]. A fundamental question to ask

3

Introduction

is when (

X

t

)

t≥0

is a semimartingale (with respect to a suitable filtration). Indeed,

Delbaen and Schachermayer [18] argue that the semimartingale property is desirable

when modeling financial markets, and by Bichteler–Dellacherie theorem it is nec-

essary and sufficient that (

X

t

)

t≥0

is a semimartingale if it is supposed to serve as a

“good” integrator (see [10, Theorem 7.6] and [19] for precise statements). Under rather

mild conditions on the driving Lévy process (

L

t

)

t∈R

, [7, Corollary 4.8] provides a

complete characterization of the semimartingale property within the moving average

framework (1.6):

Theorem 1.2 (Basse-O’Connor and Rosiński [7]).

Suppose that (

L

t

)

t∈R

has sample

paths of locally unbounded variation and that either

x 7→ F

((

−x, x

)

c

) is regularly varying

at

∞

of index

β ∈

[

−

2

,−

1) or

R

|x|>1

x

2

F

(

dx

)

< ∞

. Then (

X

t

)

t≥0

defined as in

(1.6)

is a

semimartingale with respect to the least filtration (

F

t

)

t≥0

satisfying the usual conditions

and

σ(L

s

: s ≤ t) ⊆F

t

, t ≥ 0,

if and only if g is absolutely continuous on [0, ∞) with a density g

0

satisfying

Z

∞

0

c

2

g

0

(t)

2

+

Z

R

|xg

0

(t)|∧|xg

0

(t)|

2

F(dx)

dt < ∞. (1.7)

Furthermore, if (1.7) is satisfied, (X

t

)

t≥0

admits the semimartingale decomposition

X

t

= X

0

+ g(0)

¯

L

t

+

Z

t

0

Z

s

−∞

g

0

(s −u) d

¯

L

u

ds, t ≥ 0. (1.8)

If Theorem 1.2 is applicable we have that

E

[

|L

1

|

]

< ∞

, and it follows that (

X

t

)

t≥0

can be decomposed into a sum of a martingale and an absolutely continuous stochastic

process (in fact, this implies that (

X

t

)

t≥0

is a so-called special semimartingale as

defined in [26, Definition 4.21]). Sometimes, such as when pricing derivatives or

fixed income securities in a financial market driven by semimartingales, it might be

important to know if the latter term can be absorbed by a suitable equivalent change

of measure. To be precise, for a given

T ∈

(0

,∞

) one asks if there is a probability

measure Q on F

T

such that:

(i) For all A ∈ F

T

, Q(A) > 0 if and only if P(A) > 0.

(ii) Under Q, (X

t

)

t∈[0,T ]

is a local martingale with respect to (F

t

)

t∈[0,T ]

.

Such

Q

is referred to as an equivalent local martingale measure (ELMM) for (

X

t

)

t∈[0,T ]

. It

should be mentioned that equivalent or, more generally, absolutely continuous change

of measure for some stochastic processes (such as Markov processes and solutions

to certain stochastic differential equations) is well-studied; see the introduction of

Paper A for references. While it might be tempting to require that

T

=

∞

(with

F

∞

=

W

t≥0

F

t

), this is a rather serious restriction. As an example, the probability

measures induced by two homogeneous Poisson processes with different intensities

(on the space

D

([0

,∞

)) equipped with the Skorokhod topology) are equivalent on

F

T

for any

T ∈

(0

,∞

) but singular on

F

∞

, cf. [17, Remark 9.2]. The intuition is that when

one has an infinite horizon, the intensity can be estimated almost surely from the

Poisson process. Consequently, we will return to the question of the existence of an

ELMM for (X

t

)

t∈[0,T ]

when fixing T ∈ (0,∞).

4

1 · A Wold–Karhunen type decomposition and the Lévy-driven moving averages

Recall that it is a prerequisite that (

X

t

)

t∈[0,T ]

is a semimartingale in order to admit

an ELMM ([26, Theorem 3.13 (Chapter III)]). This means that if (

L

t

)

t∈R

is a Lévy

process satisfying the assumptions of Theorem 1.2, the conditions imposed on

g

in

this theorem are necessary. Except in trivial cases it must also be the case that

g

(0)

,

0;

indeed, if g(0) = 0 and an ELMM Q exists, the representation (1.8) shows that

Z

t

0

Z

s

−∞

g

0

(s −u) d

¯

L

u

ds, t ∈ [0,T ],

is a local martingale under

Q

, and hence it must be identically equal to zero ([20,

Theorem 3.3 (Section 2)]). If the distribution of

L

1

is not degenerate, this happens

only if

g

is vanishing almost everywhere. On the other hand, Cheridito [14] showed

that if (

L

t

)

t∈R

is a Brownian motion (that is,

c

2

>

0 and

F ≡

0), the condition

g

(0)

,

0

combined with the assumptions of Theorem 1.2 are also sufficient for the existence of

an ELMM. The main purpose of Paper A has been to establish conditions ensuring

that (X

t

)

t∈[0,T ]

admits an ELMM beyond the Gaussian setting.

1.1 Paper A

Inspired by the structure of (

X

t

)

t∈[0,T ]

in

(1.8)

, this paper investigates when an ELMM

exists for semimartingales of the form

˜

X

t

= L

t

+

Z

t

0

Y

s

ds, t ∈ [0,T ],

under the assumption that (

Y

t

)

t∈[0,T ]

is a predictable process such that

R

T

0

|Y

t

| dt < ∞

almost surely and

E

[

|L

1

|

]

< ∞

. In Theorem 2.1 (Paper A) we give rather explicit suffi-

cient conditions for (

˜

X

t

)

t∈[0,T ]

to admit an ELMM. Specifically, each of the following

two statements is sufficient:

(i)

The collection (

Y

t

)

t∈[0,T ]

is tight, each

Y

t

is infinitely divisible and the corre-

sponding Lévy measures (

F

t

)

t∈[0,T ]

meet

sup

t∈[0,T ]

F

t

([

−K,K

]

c

) = 0 for some

K >

0. Moreover, the Lévy measure F of (L

t

)

t∈[0,T ]

satisfies F((−∞,0)),F((0,∞)) > 0.

(ii)

The Lévy measure

F

of (

L

t

)

t∈[0,T ]

satisfies

F

((

−∞,−K

])

,F

([

K,∞

))

>

0 for all

K >

0.

The somewhat canonical example of a process (

Y

t

)

t∈[0,T ]

satisfying (i) is a stationary

and infinitely divisible process where the Lévy measure of

Y

0

is compactly supported.

More concretely, it could be a moving average with a bounded kernel driven by a Lévy

process with a compactly supported Lévy measure. Loosely speaking, (ii) states that

no further assumptions on (

Y

t

)

t∈[0,T ]

are needed as long as (

L

t

)

t∈[0,T ]

can have jumps

of arbitrarily large positive and negative size. As an almost immediate consequence

of these findings and Theorem 1.2 above, we obtain a quite general result on the

existence of an ELMM for (

X

t

)

t∈[0,T ]

given by

(1.6)

; see Theorem 1.2 of Paper A for

details. Among other things, this result implies that if (

L

t

)

t∈R

is a symmetric

α

-stable

Lévy process for some

α ∈

(1

,

2], then there exists an ELMM for (

X

t

)

t∈[0,T ]

if and only

if

g

(0)

,

0 and

g

is absolutely continuous on [0

,∞

) with a density

g

0

which belongs

L

α

(cf. Corollary 1.3 of Paper A). Consequently, this result provides a natural extension

of the Gaussian setup studied in [14].

5

Introduction

It should be stressed that the techniques used in [14] cannot be transferred into

the non-Gaussian setting that we consider in this paper. Specifically, his proof relies

on a localized version of the Novikov condition by showing that

E

exp

1

2

Z

t

s

Y

2

u

du

< ∞ (1.9)

as long as

t −s ∈

(0

,δ

) for a

δ >

0 sufficiently small. While this can be verified in

a Gaussian setup, such a requirement is rarely satisfied in other situations. In fact,

if

R

t

s

Y

u

du

is infinitely divisible with a non-trivial Lévy measure,

(1.9)

will never

be satisfied ([34, Theorem 26.1]). The conditions (i)–(ii) above are instead results

of two alternative and very different techniques. Indeed, (i) makes use of a general

predictable criterion of Lépingle and Mémin [29], and (ii) is obtained by carefully

constructing

Q

so that it changes the distribution of the large jumps of (

L

t

)

t∈[0,T ]

, but

leaves the jump intensity constant and thereby avoiding finite explosion times.

2 Dynamic models for Lévy-driven moving averages

While the Lévy-driven moving averages define a rather flexible and tractable class of

stationary continuous-time processes, we are still left with the question: What are rea-

sonable choices of the kernel

g

? It may be desirable to choose

g

so that (

X

t

)

t∈R

exhibits a

certain autoregressive (dynamic) behavior. Since autoregressive and moving average

representations have different advantages, one would often aim at getting parsimo-

nious representations in both domains without losing too much flexibility—e.g., in

terms of possible autocovariances or, equivalently, spectral distributions that can be

generated by the model.

Motivation: To make the above discussion more concrete, let us take a step back and

consider the discrete-time equations

Y

t

=

∞

X

j=0

ψ

j

ε

t−j

and

∞

X

j=0

π

j

Y

t−j

= ε

t

, t ∈ Z, (2.1)

for suitable sequences of coefficients (

ψ

t

)

t∈N

0

and (

π

t

)

t∈N

0

, and an i.i.d. noise (

ε

t

)

t∈Z

.

Some choices of (

ψ

t

)

t∈N

0

lead to a stationary moving average (

Y

t

)

t∈Z

, defined by

the first equation of

(2.1)

, which satisfies the second equation of

(2.1)

for a suitable

choice of (

π

t

)

t∈N

0

. Conversely, for some choices of (

π

t

)

t∈N

0

the second equation of

(2.1)

has a unique stationary solution given by the first equation of

(2.1)

with a

suitably chosen sequence (

ψ

t

)

t∈N

0

. We will refer to the first and second equation of

(2.1)

as a moving average representation and an autoregressive representation of (

Y

t

)

t∈Z

,

respectively. While a moving average representation is convenient for assessing

several distributional properties of (

Y

t

)

t∈Z

, an autoregressive representation provides

a lot of valuable insight concerning the dynamic behavior of (

Y

t

)

t∈Z

; e.g., it can be

used for prediction and estimation purposes, to simulate sample paths or to filter out

the noise (ε

t

)

t∈Z

from the observed process (Y

t

)

t∈Z

.

There is no guarantee that a simple moving average representation leads to a

particularly simple autoregressive representation and vice versa. However, an ex-

tremely popular modeling class in discrete time, which allows for rather tractable

6

2 · Dynamic models for Lévy-driven moving averages

representations in both domains, consists of the causal and invertible ARMA pro-

cesses. Specifically, given two real polynomials

P

and

Q

with no zeroes on the unit

disc

D B {z ∈ C

:

|z| ≤

1

}

, the corresponding ARMA process (

Y

t

)

t∈Z

is the unique

stationary solution to the linear difference equation

P (B)Y

t

= Q(B)ε

t

, t ∈ Z. (2.2)

Here

B

denotes the backward shift operator. In this case, (

ψ

t

)

t∈N

0

and (

π

t

)

t∈N

0

corre-

spond to the coefficients in the power series expansion on

D

of the rational functions

Q/P

and

P /Q

, respectively. The difficulty of computing the coefficients depends ulti-

mately on the denominator polynomial, and hence there is a tradeoff between the

simplicity of the moving average and the autoregressive specification. One advantage

of the ARMA framework, however, is that the coefficients can always be obtained by

relying on simple properties of the geometric series and, possibly, a partial fraction

decomposition. An easy example is the AR(1) process where

P

(

z

) = 1

−αz

for some

α ∈

(

−

1

,

1) and

Q ≡

1. In this case

π

0

= 1,

π

1

=

−α

and

π

j

= 0 for

j ≥

2, while

ψ

j

=

α

j

for all

j ≥

0. There exists a vast amount of literature related to ARMA processes and

various extensions. For further details, see [11, 25].

Continuous-time ARMA equations: Since the coefficients in the moving average

representation of the discrete-time AR(1) process take a geometric form, the con-

tinuous-time equivalent is naturally

g

(

t

) =

e

−λt

for

t ≥

0 and a given

λ >

0. The

corresponding process (

X

t

)

t∈R

given by

(1.6)

, known as the Ornstein–Uhlenbeck pro-

cess, is perhaps the most well-studied Lévy-driven moving average of all time, and it

can be characterized as the unique stationary solution to the stochastic differential

equation

X

t

−X

s

= −λ

Z

t

s

X

u

du + L

t

−L

s

, s < t. (2.3)

Ornstein–Uhlenbeck processes enjoy many properties: they are Markovian, their

possible one-dimensional marginal laws coincide with the self-decomposable distri-

butions and a sampled Ornstein–Uhlenbeck process (

X

t∆

)

t∈Z

is an AR(1) process for

any

∆ >

0. For details about Ornstein–Uhlenbeck processes and further references,

see Section 1 of Paper B.

Defining formally the derivatives (

DX

t

)

t∈R

and (

DL

t

)

t∈R

of (

X

t

)

t∈R

and (

L

t

)

t∈R

,

respectively,

(2.3)

reads (

D

+

λ

)

X

t

=

DL

t

for

t ∈ R

. In light of this equation and

(2.2)

it

makes sense to view a process (

X

t

)

t∈R

as a continuous-time ARMA (CARMA) process

if it is stationary and satisfies the formal equation

P (D)X

t

= Q(D)DL

t

, t ∈ R, (2.4)

for two real polynomials

P

and

Q

. Although the derivatives on the right-hand side

will not be well-defined in the usual sense (except in trivial cases), (

X

t

)

t∈R

is defined

rigorously through its corresponding moving average representation. Specifically, by

assuming that

p

:=

deg

(

P

) and

q

:=

deg

(

Q

) satisfy

p > q

and that

P

has no zeroes on

{z ∈ C

:

Re

(

z

)

≥

0

}

, there exists a function

g : R → R

which vanishes on (

−∞,

0) and

has Fourier transform

F [g](y) =

Q(iy)

P (iy)

, y ∈ R.

7

Introduction

As for the ARMA processes, the rational form of the Fourier transform ensures

that one can compute

g

explicitly by relying on the fact that

t 7→ 1

[0,∞)

(

t

)

e

−λt

has

Fourier transform

y 7→

(

iy

+

λ

)

−1

for any

λ >

0. This construction ensures that

g

is

absolutely continuous on [0

,∞

) and decays exponentially fast at

∞

, and hence the

causal CARMA(

p, q

) process with polynomials

P

and

Q

can be rigorously defined

as the moving average

(1.6)

with kernel

g

as long as

E

[

log

+

|L

1

|

]

< ∞

. On a heuristic

level, one can apply the Fourier transform on both sides of the equation

(2.4)

and

rearrange terms in order to reach the conclusion that a CARMA process should have

such a moving average representation. For applications and properties of the CARMA

process as well as details about its definition, see Sections 1 and 4.3 of Paper D and

references therein.

Continuous-time autoregressive representations: To sum up, the continuous-time ver-

sion of the moving average representation in

(2.1)

is the Lévy-driven moving average

(1.6)

, and the ARMA equation

(2.2)

may naturally be interpreted as

(2.4)

, which in

turn leads to the CARMA processes that have a fairly tractable kernel

g

. Still, when

comparing to the discrete-time setup, some questions arise immediately:

(i) What is an autoregressive representation in continuous time?

(ii) Which types of moving averages admit such a representation?

(iii)

Does the CARMA process admit an autoregressive representation and is it particu-

larly simple?

Suppose that

E

[

L

1

] = 0 and

E

[

L

2

1

]

< ∞

. For a process (

X

t

)

t∈R

with

E

[

X

0

] = 0 and

E

[

X

2

t

]

< ∞

to admit an autoregressive representation it seems reasonable to require

that

sp{X

u

: u ≤ t} ⊇ sp{L

t

−L

s

: s ≤ t}, t ∈ R. (2.5)

When (

X

t

)

t∈R

is of the moving average form

(1.6)

for some

g ∈L

2

which is vanishing

on (

−∞,

0), the reverse inclusion of

(2.5)

is always satisfied and equality holds if

and only if

F

[

g

] is a so-called outer function ([21, pp. 94–95]). While there exist

conditions ensuring that a function is outer, these are often not easy to check and,

more importantly, in many situations the recipe for going from (

X

u

)

u≤t

to

L

t

−L

s

is not

clear. Instead, we take the opposite standpoint and define a class of processes by an

autoregressive type of equations, such that this transition is simple and transparent.

Of course, then we need to argue that it contains a sufficiently wide class of mov-

ing averages—ideally, to align with the discrete-time representations, the invertible

CARMA processes should form a particularly nice subclass. The relation between

this class of autoregressions and moving averages should be somewhat as depicted in

Figure 1.

The class of interest will be solutions to the so-called stochastic delay differen-

tial equations (SDDEs), which in the simplest case (univariate, first order and non-

fractional) are of the form

X

t

−X

s

=

Z

t

s

Z

[0,∞)

X

u−v

η(dv) du + L

t

−L

s

, s < t. (2.6)

Here

η

is a finite signed measure and (

X

t

)

t∈R

is a measurable process such that the

integral in

(2.6)

is well-defined almost surely for each

s < t

. Among other things, the

8

2 · Dynamic models for Lévy-driven moving averages

Autoregressions

Moving Averages

CARMA

Figure 1:

Invertible and causal CARMA processes being a strict subset of processes which both admit an

autoregressive representation and a moving average representation.

purpose of Papers B–E and G has been to address each of the questions (i)–(iii) in

frameworks related to

(2.6)

and show that many properties of the solutions are akin to

those of discrete-time autoregressions. Depending on the paper, different assumptions

are put on (

X

t

)

t∈R

in order to ensure that the integral in

(2.6)

is well-defined. For

now let us just remark that each of the following three conditions is sufficient: (i)

η

is

compactly supported and

t 7→ X

t

is càdlàg, (ii) (

X

t

)

t∈R

is stationary and

E

[

|X

0

|

]

< ∞

,

and (iii) (X

t

)

t∈R

has stationary increments, E[|X

t

|] < ∞ for all t and

R

[0,∞)

t |η|(dt) < ∞

(the latter condition is due to [5, Corollary A.3]). One of the simplest SDDEs is the

Ornstein–Uhlenbeck equation

(2.3)

, which corresponds to

η

=

−λδ

0

with

δ

0

being

the Dirac measure at 0. The literature has primarily focused on the case where

η

is

compactly supported (cf. [24, 30]), but as we shall see in Paper D, this restriction

unfortunately rules out the possibility of representing CARMA processes with a

non-trivial moving average polynomial as solutions to SDDEs. To the best of our

knowledge, SDDEs have historically not been viewed as continuous-time equivalents

to discrete-time autoregressive representations, and hence questions such as (i)–(iii)

have not been raised.

Before jumping into technical descriptions of the attached papers on SDDEs,

we will briefly comment on their scopes. Papers B and D address existence and

uniqueness of stationary solutions to

(2.6)

, also when the noise is much more general

than (

L

t

)

t∈R

, and in Paper D the results are shown to hold true in a multidimensional

and higher order setting as well. Moreover, Paper E defines a large class of fractional

delays which all give rise to stationary solutions that are semimartingales and have

hyperbolically decaying autocovariance functions. While the equations considered

in this paper do indeed take the form

(2.6)

in special cases, the general framework

is different and specifically tailored for producing long-memory processes. Finally,

Paper G studies existence and uniqueness of solutions which are not necessarily

stationary, but have stationary increments, in the same type of multivariate setting as

in Paper D, and it characterizes the space of the corresponding cointegration vectors.

In general, the papers draw clear parallels to well-known discrete-time models such

as the fractionally integrated ARMA model and the cointegrated VAR model.

Besides whether we consider a univariate or multivariate version of

(2.6)

, there is

another factor discriminating the papers: to find solutions to

(2.6)

using Papers B–D

we must have that

η

([0

,∞

))

,

0, while Papers E and G sometimes apply in cases

where

η

([0

,∞

)) = 0. The condition

η

([0

,∞

)) = 0 corresponds to the autoregressive

polynomial having a zero at

z

= 1 in a discrete-time setting, and it is closely related

9

Introduction

to memory and stationarity properties of the solution. Table 1 gives an overview of

the focus in each of the five papers on SDDEs.

Table 1: An overview of the five papers on SDDEs.

Univariate Multivariate

η([0,∞)) , 0 B, C D

η([0,∞)) = 0 E G

2.1 Papers B and D

Papers B and D are very much related in the sense that the latter extends the former

to a multivariate framework, and questions such as existence and uniqueness of

stationary solutions are addressed in both papers. Despite this, they still have fairly

different aims:

(i)

Paper B also contains a study of an alternative type of autoregressive represen-

tation than the SDDE and many examples are provided.

(ii)

Paper D is generally more technical and is also concerned with representations

of solutions, prediction formulas, higher order SDDEs and their relation to

invertible CARMA processes.

Here we will briefly discuss the main findings of the two papers, but only formulate

them in the univariate setting. The multivariate extension is more demanding from

a notational point of view and, thus, we refer to Paper D for further details. The

majority of the proofs in Papers B and D rely on the idea of rephrasing the problems

in the frequency domain and then exploiting key results from harmonic analysis,

such as certain Paley–Wiener theorems and characterizations of Hardy spaces, to

establish the existence of the appropriate functions.

The equation of interest is

(2.6)

with a more general noise and of higher order,

namely

X

(m−1)

t

−X

(m−1)

s

=

m−1

X

j=0

Z

t

s

Z

[0,∞)

X

(j)

u−v

η

j

(dv) du + Z

t

−Z

s

, s < t. (2.7)

where (

Z

t

)

t∈R

is a measurable process with stationary increments,

Z

0

= 0 and

E

[

|Z

t

|

]

<

∞

for all

t ∈ R

. Here

m ∈ N

, the measures

η

0

,η

1

,... , η

m−1

are finite and signed, and

(

X

(j)

t

)

t∈R

denotes the

j

th derivative of (

X

t

)

t∈R

with respect to

t

. For convenience, we

will assume that (

Z

t

)

t∈R

is a regular integrator in the sense of Proposition 4.1 (Paper D).

For now it suffices to know that a regular integrator ensures that the solutions we

construct can be expressed as moving averages and that Lévy processes, fractional

Lévy processes and many semimartingales with stationary increments are regular

integrators. It should be stressed that existence and uniqueness of solutions to

(2.7)

can still be obtained when (

Z

t

)

t∈R

is not a regular integrator; see Theorem 2.5 of

Paper B and Theorem 3.1 of Paper D for the case m = 1.

As discussed in relation to Table 1, we need to impose conditions ensuring that

P

m−1

j=0

η

j

([0

,∞

))

,

0 in order to prove existence and uniqueness of stationary solutions

10

2 · Dynamic models for Lévy-driven moving averages

to

(2.7)

. Specifically, it is assumed that

R

[0,∞)

t

2

|η

j

|

(

dt

)

< ∞

for

j

= 0

,

1

,... , m −

1 and

that the equation

h

η

(z) := z

m

−

m−1

X

j=0

z

j

Z

[0,∞)

e

−zt

η

j

(dt) = 0 (2.8)

has no solutions on the imaginary axis

{z ∈ C

:

Re

(

z

) = 0

}

. Here

|η

j

|

denotes the

variation of

η

j

. Theorem 4.5 (Paper D) states that, under these assumptions, the

unique stationary solution to (2.7) is given by

X

t

=

Z

R

g(t −u) dZ

u

, t ∈ R. (2.9)

where

g : R → R

can be characterized through its Fourier transform as

F

[

g

](

y

) =

h

η

(

iy

)

−1

for

y ∈ R

. Note that

F

[

g

] is well-defined due to the imposed assumption

on

h

η

. Here uniqueness means that for any other measurable and stationary process

(

X

t

)

t∈R

which has

E

[

|X

0

|

]

< ∞

and satisfies

(2.7)

, the equality in

(2.9)

holds true

almost surely for each t ∈ R. It follows that (X

t

)

t∈R

is a backward moving average of

the form

(1.5)

if

g

is vanishing on (

−∞,

0) almost everywhere, and this is the case if

the equation in (2.8) has no solutions on {z ∈ C : Re(z) ≥ 0}.

The last result addressed here concerns the possibility of representing CARMA

processes as unique solutions to certain SDDEs. Hence, we consider any two real and

monic polynomials

P

and

Q

with corresponding degrees

p > q

, and we assume that

P

has no zeroes in

{z ∈ C

:

Re

(

z

) = 0

}

and does not share any zeroes with

Q

. Moreover, we

let (

X

t

)

t∈R

be given by

(2.9)

with

F

[

g

](

y

) =

Q

(

iy

)

/P

(

iy

) for

y ∈ R

. This setup covers in

particular the causal Lévy-driven CARMA process introduced above when

E

[

|L

1

|

]

< ∞

,

but also more general CARMA frameworks as discussed in Section 4.3 (Paper D). In

line with discrete-time ARMA processes we need an invertibility assumption in order

to obtain an autoregressive representation, and this amounts in turn to assuming that

the zeroes of

Q

do not belong to

{z ∈ C

:

Re

(

z

)

≥

0

}

. Note that this is exactly what is

needed for

g

to be outer (see [21, Exercise 2 (Section 2.7)]), which is necessary and

sufficient for

(2.5)

to hold when

E

[

L

1

] = 0 and

E

[

L

2

1

]

< ∞

. While the rational function

P /Q

was the key ingredient in order to obtain an autoregressive representation of

ARMA processes in a discrete-time setup, the continuous-time SDDE setup requires

a decomposition of P . Specifically, we decompose P as

P = QR + S,

where

R

and

S

are polynomials such that

deg

(

R

) =

p −q

and

deg

(

S

)

< q

(

S ≡

0 if

q

= 0).

Such a decomposition is unique and can be obtained using polynomial long division.

Set m = p −q and write

R(z) = z

m

−c

m−1

z

m−1

−···−c

0

, z ∈ C,

for suitable

c

0

,... , c

m−1

∈ R

. The essence of Theorem 4.8 (Paper D) is that (

X

t

)

t∈R

is

the unique stationary solution to (2.7) when

η

0

(dt) = c

0

δ

0

(dt) + f (t) dt and η

j

= c

j

δ

0

, j = 1,..., m −1, (2.10)

where

f : R → R

is vanishing on (

−∞,

0) and characterized by

F

[

f

](

y

) =

S

(

iy

)

/Q

(

iy

)

for

y ∈ R

. One should notice here that, similarly to computing the coefficients in the

11

Introduction

autoregressive representation of an ARMA process, writing up the SDDE associated

to a particular CARMA process reduces to finding a function with a certain rational

Fourier transform.

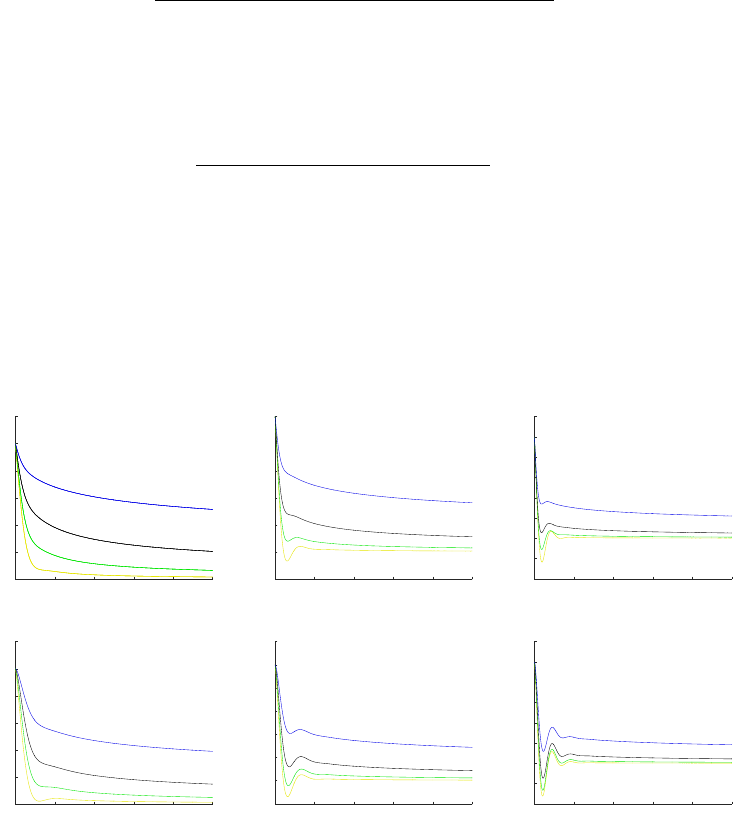

2.2 Paper C

Inspired by the study of Brockwell et al. [12], the purpose of this paper is to carry

out a simulation study, which is designed to check the possibility of using SDDEs

to filter out (or recover) the noise process from an observed invertible Lévy-driven

CARMA(2

,

1) process (

X

t

)

t∈R

. Specifically, the results of Papers B and D ensure the

existence of α,β ∈ R and γ > 0 such that

dX

t

= αX

t

dt + β

Z

∞

0

e

−γu

X

t−u

du dt + dL

t

, t ∈ R, (2.11)

so by observing (

X

t

)

t∈R

on a sufficiently fine grid the distribution of

L

1

is estimated

by discretizing

(2.11)

. Before this step we estimate the vector (

α,β,γ

) of parameters

by a least squares approach. We refer to Sections 3 and 4 (in particular, Figures 2

and 3) of Paper C for further details.

2.3 Paper E

This paper is concerned with the question of incorporating long memory into the

solutions of equations of a similar type as the SDDE in

(2.6)

when

E

[

L

1

] = 0 and

E

[

L

2

1

] = 1. The notion of long memory refers in this context to a certain asymptotic

behavior of either the autocovariance function

γ

X

or, if it exists, the spectral density

f

X

of the solution (X

t

)

t∈R

, namely that

γ

X

(h) ∼ αh

2β−1

as h → ∞ or f

X

(y) ∼ α|y|

−2β

as y → 0 (2.12)

for some

α >

0 and

β ∈

(0

,

1

/

2). Here, and in what follows, we use the notation

f

(

t

)

∼ g

(

t

) for two functions

f ,g : R → C

to indicate that

f

(

t

)

/g

(

t

)

→

1 for

t

tending

to some specified limit. By a Tauberian argument, the two conditions in

(2.12)

are

equivalent under suitable regularity conditions. Recall that, under the assumptions

of Papers B and D, the unique solution to

(2.6)

is a moving average driven by (

L

t

)

t∈R

with a kernel

g

satisfying

F

[

g

](

y

) = (

iy −F

[

η

](

y

))

−1

for

y ∈ R

. It is not too difficult

to verify that

g ∈ L

1

∩L

2

(Lemma 2.2 of Paper B) and

f

X

(

y

) = (2

π

)

−1

|iy −F

[

η

](

y

)

|

−2

(Plancherel’s theorem), and hence the solution does not possess any of the properties

in (2.12).

The general equation considered in Paper E is

X

t

−X

s

=

Z

t

−∞

D

β

−

1

(s,t]

(u)

Z

[0,∞)

X

u−v

η(dv) du + L

t

−L

s

, s < t, (2.13)

where

D

β

−

1

(s,t]

(u) =

1

Γ (1 −β)

h

(t −u)

−β

+

−(s −u)

−β

+

i

, u ∈ R,

is the right-sided Riemann–Liouville fractional derivative of the indicator function

1

(s,t]

. While solutions to

(2.13)

may indeed be viewed as solutions to

(2.6)

in some

12

2 · Dynamic models for Lévy-driven moving averages

cases (see Example 4.5 of Paper E),

(2.13)

is generally better suited for studying long-

memory processes. To motivate this statement, note that while both

(2.6)

and

(2.13)

can be written as

X

t

−X

s

=

Z

∞

0

X

t−u

µ

t−s

(du) + L

t

−L

s

, s < t, (2.14)

for a suitable family of finite measures (

µ

h

)

h>0

, it can be checked that, as

y →

0 and for

each

h >

0,

F

[

µ

h

](

y

)

∼ hη

([0

,∞

)) in the former case and

F

[

µ

h

](

y

)

∼ hη

([0

,∞

))(

iy

)

β

in

the latter case. When also keeping in mind that the autoregressive coefficients (

π

j

)

j∈N

0

of discrete-time fractional (ARFIMA type) processes satisfy

P

∞

j=0

π

j

e

−ijy

∼ α

(

iy

)

β

as

y →

0 for some

α >

0 (see, e.g., [11, Section 13.2]), this should indicate that

(2.13)

might be well-suited for the construction of long-memory processes.

In order to show existence and uniqueness of solutions to

(2.13)

it is assumed that

R

[0,∞)

t |η|(dt) < ∞ and that the equation

h

η,β

(z) := z

1−β

−

Z

[0,∞)

e

−zt

η(dt) = 0

has no solution

z ∈ C

with

Re

(

z

)

≥

0. Here we define

z

γ

as

r

γ

e

iγθ

, where

r >

0 and

θ ∈

(

−π, π

] correspond to the polar representation

z

=

re

iθ

of

z ∈ C \{

0

}

. Theorem 3.2

(Paper E) shows that these assumptions are sufficient to ensure that the unique

solution to

(2.13)

is a backward moving average of the form

(1.6)

with

F

[

g

](

y

) =

(

iy

)

−β

h

η,β

(

iy

)

−1

for

y ∈ R

. The notion of uniqueness is, however, weaker than in the

non-fractional setting considered in Papers B and D; it is the only stationary process

(

X

t

)

t∈R

which satisfies

(2.13)

, and which is purely non-deterministic in the sense that

E[X

0

] = 0, E[X

2

0

] < ∞ and

\

t∈R

sp{X

s

: s ≤ t} = {0}.

Note that if

µ

h

((0

,∞

)) = 0 for all

h >

0,

(2.14)

reveals immediately that translations

of solutions remain solutions, and hence we cannot have the same strong type of

uniqueness as in Papers B and D. Proposition 3.7 (Paper E) shows that the model

generates exactly the type of long memory behavior that we asked for in (2.12):

γ

X

(h) ∼

Γ (1 −2β)

Γ (β)Γ (1 −β)η([0,∞))

2

h

2β−1

as h → ∞

and f

X

(y) ∼

1

η([0,∞))

2

|y|

−2β

as y → 0.

An interesting feature of generating long memory processes in this way is that,

in contrast to the long memory models in continuous time which are based on

a fractional noise, the local path properties do not depend on

β

and (

X

t

)

t≥0

is a

semimartingale (see Remarks 3.9 and 3.10 as well as the comment in relation to

Proposition 3.6 of Paper E). Based on the close relation between CARMA processes

and SDDEs with a certain type of delay (cf.

(2.10)

), this subclass is studied in detail

and related to the fractionally integrated CARMA processes introduced in [13].

While the proofs of the paper do indeed make use of some of the same type

of results as in Papers B and D, theory from fractional calculus as well as spectral

representations of stationary processes also play a significant role.

13

Introduction

2.4 Paper G

In Papers B and D it was argued that, under some additional assumptions, a unique

stationary solution to

(2.6)

exists if

η

([0

,∞

))

,

0, and Example 4.5 of Paper E shows

that a stationary solution can sometimes exist even when

F

[

η

](

y

)

∼ α

(

iy

)

β

as

y →

0 for

some

α >

0 and

β ∈

(0

,

1

/

2). But what happens if the convergence

F

[

η

](

y

)

→

0 is fast?

An extreme example is

η ≡

0, where a stationary solution to

(2.6)

cannot exist unless

(

L

t

)

t∈R

is identically zero. A more moderate example could be

η

(

dt

) = (

Df

)(

t

)

dt

with

f ,Df ∈ L

1

. To be able to find solutions in such situations it seems reasonable to allow

that a solution is not stationary, but only has stationary increments. In the literature

([16]), a process with these characteristics is often referred to as being integrated (of

order one).

The purpose of Paper G is to study solutions to SDDEs which are possibly in-

tegrated and, in the multivariate setting, cointegrated. Cointegration refers to the

phenomenon that an

n

-dimensional process (

X

t

)

t∈R

is integrated, but (

β

>

X

t

)

t∈R

is

stationary for some cointegration vector

β ∈ R

n

\{

0

}

. In the paper we prove a Granger

type representation theorem, which characterizes the class of integrated solutions

to SDDEs under appropriate assumptions. This representation reveals in particular

that increments of solutions are uniquely determined, but the possible translations as

well as the number of linearly independent cointegration vectors are tied to the rank

of

η

([0

,∞