Red Hat Enterprise Linux 9.0

Monitoring and managing system status and performance

Optimizing system throughput, latency, and power consumption

Last Updated: 2024-08-05

Legal Notice

Copyright © 2024 Red Hat, Inc.

The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version.

Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law.

Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries.

Linux® is the registered trademark of Linus Torvalds in the United States and other countries.

Java® is a registered trademark of Oracle and/or its affiliates.

XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Node.js® is an official trademark of Joyent. Red Hat is not formally related to or endorsed by the official Joyent Node.js open source or commercial project.

The OpenStack® Word Mark and OpenStack logo are either registered trademarks/service marks or trademarks/service marks of the OpenStack Foundation, in the United States and other countries and are used with the OpenStack Foundation's permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

All other trademarks are the property of their respective owners.

Abstract

Monitor and optimize the throughput, latency, and power consumption of Red Hat Enterprise Linux 9 in different scenarios.

Table of Contents

PROVIDING FEEDBACK ON RED HAT DOCUMENTATION

CHAPTER 1. GETTING STARTED WITH TUNED

1.1. THE PURPOSE OF TUNED

1.2. TUNED PROFILES

Syntax of profile configuration

1.3. THE DEFAULT TUNED PROFILE

1.4. MERGED TUNED PROFILES

1.5. THE LOCATION OF TUNED PROFILES

1.6. TUNED PROFILES DISTRIBUTED WITH RHEL

1.7. TUNED CPU-PARTITIONING PROFILE

1.8. USING THE TUNED CPU-PARTITIONING PROFILE FOR LOW-LATENCY TUNING

1.9. CUSTOMIZING THE CPU-PARTITIONING TUNED PROFILE

1.10. REAL-TIME TUNED PROFILES DISTRIBUTED WITH RHEL

1.11. STATIC AND DYNAMIC TUNING IN TUNED

1.12. TUNED NO-DAEMON MODE

1.13. INSTALLING AND ENABLING TUNED

1.14. LISTING AVAILABLE TUNED PROFILES

1.15. SETTING A TUNED PROFILE

1.16. USING THE TUNED D-BUS INTERFACE

1.16.1. Using the TuneD D-Bus interface to show available TuneD D-Bus API methods

1.16.2. Using the TuneD D-Bus interface to change the active TuneD profile

1.17. DISABLING TUNED

CHAPTER 2. CUSTOMIZING TUNED PROFILES

2.1. TUNED PROFILES

Syntax of profile configuration

2.2. THE DEFAULT TUNED PROFILE

2.3. MERGED TUNED PROFILES

2.4. THE LOCATION OF TUNED PROFILES

2.5. INHERITANCE BETWEEN TUNED PROFILES

2.6. STATIC AND DYNAMIC TUNING IN TUNED

2.7. TUNED PLUG-INS

Syntax for plug-ins in TuneD profiles

Short plug-in syntax

Conflicting plug-in definitions in a profile

2.8. AVAILABLE TUNED PLUG-INS

Monitoring plug-ins

Tuning plug-ins

2.9. FUNCTIONALITIES OF THE SCHEDULER TUNED PLUGIN

2.10. VARIABLES IN TUNED PROFILES

2.11. BUILT-IN FUNCTIONS IN TUNED PROFILES

2.12. BUILT-IN FUNCTIONS AVAILABLE IN TUNED PROFILES

2.13. CREATING NEW TUNED PROFILES

2.14. MODIFYING EXISTING TUNED PROFILES

2.15. SETTING THE DISK SCHEDULER USING TUNED

CHAPTER 3. REVIEWING A SYSTEM BY USING THE TUNA INTERFACE

3.1. INSTALLING THE TUNA TOOL

3.2. VIEWING THE SYSTEM STATUS BY USING THE TUNA TOOL

3.3. TUNING CPUS BY USING THE TUNA TOOL

3.4. TUNING IRQS BY USING THE TUNA TOOL

CHAPTER 4. MONITORING PERFORMANCE USING RHEL SYSTEM ROLES

4.1. PREPARING A CONTROL NODE AND MANAGED NODES TO USE RHEL SYSTEM ROLES

4.1.1. Preparing a control node on RHEL 9

4.1.2. Preparing a managed node

4.2. INTRODUCTION TO THE METRICS RHEL SYSTEM ROLE

4.3. USING THE METRICS RHEL SYSTEM ROLE TO MONITOR YOUR LOCAL SYSTEM WITH VISUALIZATION

4.4. USING THE METRICS RHEL SYSTEM ROLE TO SET UP A FLEET OF INDIVIDUAL SYSTEMS TO MONITOR THEMSELVES

4.5. USING THE METRICS RHEL SYSTEM ROLE TO MONITOR A FLEET OF MACHINES CENTRALLY USING YOUR LOCAL MACHINE

4.6. SETTING UP AUTHENTICATION WHILE MONITORING A SYSTEM BY USING THE METRICS RHEL SYSTEM ROLE

4.7. USING THE METRICS RHEL SYSTEM ROLE TO CONFIGURE AND ENABLE METRICS COLLECTION FOR SQL SERVER

4.8. CONFIGURING PMIE WEBHOOKS USING THE METRICS RHEL SYSTEM ROLE

CHAPTER 5. SETTING UP PCP

5.1. OVERVIEW OF PCP

5.2. INSTALLING AND ENABLING PCP

5.3. DEPLOYING A MINIMAL PCP SETUP

5.4. SYSTEM SERVICES AND TOOLS DISTRIBUTED WITH PCP

5.5. PCP DEPLOYMENT ARCHITECTURES

5.6. RECOMMENDED DEPLOYMENT ARCHITECTURE

5.7. SIZING FACTORS

5.8. CONFIGURATION OPTIONS FOR PCP SCALING

5.9. EXAMPLE: ANALYZING THE CENTRALIZED LOGGING DEPLOYMENT

5.10. EXAMPLE: ANALYZING THE FEDERATED SETUP DEPLOYMENT

5.11. ESTABLISHING SECURE PCP CONNECTIONS

5.11.1. Secure PCP connections

5.11.2. Configuring secure connections for PCP collector components

5.11.3. Configuring secure connections for PCP monitoring components

5.12. TROUBLESHOOTING HIGH MEMORY USAGE

CHAPTER 6. LOGGING PERFORMANCE DATA WITH PMLOGGER

6.1. MODIFYING THE PMLOGGER CONFIGURATION FILE WITH PMLOGCONF

6.2. EDITING THE PMLOGGER CONFIGURATION FILE MANUALLY

6.3. ENABLING THE PMLOGGER SERVICE

6.4. SETTING UP A CLIENT SYSTEM FOR METRICS COLLECTION

6.5. SETTING UP A CENTRAL SERVER TO COLLECT DATA

6.6. SYSTEMD UNITS AND PMLOGGER

6.7. REPLAYING THE PCP LOG ARCHIVES WITH PMREP

6.8. ENABLING PCP VERSION 3 ARCHIVES

CHAPTER 7. MONITORING PERFORMANCE WITH PERFORMANCE CO-PILOT

7.1. MONITORING POSTFIX WITH PMDA-POSTFIX

7.2. VISUALLY TRACING PCP LOG ARCHIVES WITH THE PCP CHARTS APPLICATION

7.3. COLLECTING DATA FROM SQL SERVER USING PCP

7.4. GENERATING PCP ARCHIVES FROM SADC ARCHIVES

CHAPTER 8. PERFORMANCE ANALYSIS OF XFS WITH PCP

8.1. INSTALLING XFS PMDA MANUALLY

8.2. EXAMINING XFS PERFORMANCE METRICS WITH PMINFO

8.3. RESETTING XFS PERFORMANCE METRICS WITH PMSTORE

8.4. PCP METRIC GROUPS FOR XFS

8.5. PER-DEVICE PCP METRIC GROUPS FOR XFS

CHAPTER 9. SETTING UP GRAPHICAL REPRESENTATION OF PCP METRICS

9.1. SETTING UP PCP WITH PCP-ZEROCONF

9.2. SETTING UP A GRAFANA-SERVER

9.3. ACCESSING THE GRAFANA WEB UI

9.4. CONFIGURING SECURE CONNECTIONS FOR GRAFANA

9.5. CONFIGURING PCP REDIS

9.6. CONFIGURING SECURE CONNECTIONS FOR PCP REDIS

9.7. CREATING PANELS AND ALERT IN PCP REDIS DATA SOURCE

9.8. ADDING NOTIFICATION CHANNELS FOR ALERTS

9.9. SETTING UP AUTHENTICATION BETWEEN PCP COMPONENTS

9.10. INSTALLING PCP BPFTRACE

9.11. VIEWING THE PCP BPFTRACE SYSTEM ANALYSIS DASHBOARD

9.12. INSTALLING PCP VECTOR

9.13. VIEWING THE PCP VECTOR CHECKLIST

9.14. USING HEATMAPS IN GRAFANA

9.15. TROUBLESHOOTING GRAFANA ISSUES

CHAPTER 10. OPTIMIZING THE SYSTEM PERFORMANCE USING THE WEB CONSOLE

10.1. PERFORMANCE TUNING OPTIONS IN THE WEB CONSOLE

10.2. SETTING A PERFORMANCE PROFILE IN THE WEB CONSOLE

10.3. MONITORING PERFORMANCE ON THE LOCAL SYSTEM BY USING THE WEB CONSOLE

10.4. MONITORING PERFORMANCE ON SEVERAL SYSTEMS BY USING THE WEB CONSOLE AND GRAFANA

CHAPTER 11. SETTING THE DISK SCHEDULER

11.1. AVAILABLE DISK SCHEDULERS

11.2. DIFFERENT DISK SCHEDULERS FOR DIFFERENT USE CASES

11.3. THE DEFAULT DISK SCHEDULER

11.4. DETERMINING THE ACTIVE DISK SCHEDULER

11.5. SETTING THE DISK SCHEDULER USING TUNED

11.6. SETTING THE DISK SCHEDULER USING UDEV RULES

11.7. TEMPORARILY SETTING A SCHEDULER FOR A SPECIFIC DISK

CHAPTER 12. TUNING THE PERFORMANCE OF A SAMBA SERVER

12.1. SETTING THE SMB PROTOCOL VERSION

12.2. TUNING SHARES WITH DIRECTORIES THAT CONTAIN A LARGE NUMBER OF FILES

12.3. SETTINGS THAT CAN HAVE A NEGATIVE PERFORMANCE IMPACT

CHAPTER 13. OPTIMIZING VIRTUAL MACHINE PERFORMANCE

13.1. WHAT INFLUENCES VIRTUAL MACHINE PERFORMANCE

The impact of virtualization on system performance

Reducing VM performance loss

13.2. OPTIMIZING VIRTUAL MACHINE PERFORMANCE BY USING TUNED

13.3. OPTIMIZING LIBVIRT DAEMONS

13.3.1. Types of libvirt daemons

13.3.2. Enabling modular libvirt daemons

13.4. CONFIGURING VIRTUAL MACHINE MEMORY

13.4.1. Adding and removing virtual machine memory by using the web console

13.4.2. Adding and removing virtual machine memory by using the command-line interface

13.4.3. Adding and removing virtual machine memory by using virtio-mem

13.4.3.1. Overview of virtio-mem

13.4.3.2. Configuring memory onlining in virtual machines

13.4.3.3. Attaching a virtio-mem device to virtual machines

13.4.3.4. Comparison of memory onlining configurations

13.4.4. Additional resources

13.5. OPTIMIZING VIRTUAL MACHINE I/O PERFORMANCE

13.5.1. Tuning block I/O in virtual machines

13.5.2. Disk I/O throttling in virtual machines

13.5.3. Enabling multi-queue virtio-scsi

13.6. OPTIMIZING VIRTUAL MACHINE CPU PERFORMANCE

13.6.1. Adding and removing virtual CPUs by using the command-line interface

13.6.2. Managing virtual CPUs by using the web console

13.6.3. Configuring NUMA in a virtual machine

13.6.4. Sample vCPU performance tuning scenario

13.6.5. Managing kernel same-page merging

13.7. OPTIMIZING VIRTUAL MACHINE NETWORK PERFORMANCE

13.8. VIRTUAL MACHINE PERFORMANCE MONITORING TOOLS

13.9. ADDITIONAL RESOURCES

CHAPTER 14. IMPORTANCE OF POWER MANAGEMENT

14.1. POWER MANAGEMENT BASICS

14.2. AUDIT AND ANALYSIS OVERVIEW

14.3. TOOLS FOR AUDITING

CHAPTER 15. MANAGING POWER CONSUMPTION WITH POWERTOP

15.1. THE PURPOSE OF POWERTOP

15.2. USING POWERTOP

15.2.1. Starting PowerTOP

15.2.2. Calibrating PowerTOP

15.2.3. Setting the measuring interval

15.2.4. Additional resources

15.3. POWERTOP STATISTICS

15.3.1. The Overview tab

15.3.2. The Idle stats tab

15.3.3. The Device stats tab

15.3.4. The Tunables tab

15.3.5. The WakeUp tab

15.4. WHY POWERTOP DOES NOT DISPLAY FREQUENCY STATS VALUES IN SOME INSTANCES

15.5. GENERATING AN HTML OUTPUT

15.6. OPTIMIZING POWER CONSUMPTION

15.6.1. Optimizing power consumption using the powertop service

15.6.2. The powertop2tuned utility

15.6.3. Optimizing power consumption using the powertop2tuned utility

15.6.4. Comparison of powertop.service and powertop2tuned

CHAPTER 16. GETTING STARTED WITH PERF

16.1. INTRODUCTION TO PERF

16.2. INSTALLING PERF

16.3. COMMON PERF COMMANDS

CHAPTER 17. PROFILING CPU USAGE IN REAL TIME WITH PERF TOP

17.1. THE PURPOSE OF PERF TOP

17.2. PROFILING CPU USAGE WITH PERF TOP

17.3. INTERPRETATION OF PERF TOP OUTPUT

17.4. WHY PERF DISPLAYS SOME FUNCTION NAMES AS RAW FUNCTION ADDRESSES

17.5. ENABLING DEBUG AND SOURCE REPOSITORIES

17.6. GETTING DEBUGINFO PACKAGES FOR AN APPLICATION OR LIBRARY USING GDB

CHAPTER 18. COUNTING EVENTS DURING PROCESS EXECUTION WITH PERF STAT

18.1. THE PURPOSE OF PERF STAT

18.2. COUNTING EVENTS WITH PERF STAT

18.3. INTERPRETATION OF PERF STAT OUTPUT

18.4. ATTACHING PERF STAT TO A RUNNING PROCESS

CHAPTER 19. RECORDING AND ANALYZING PERFORMANCE PROFILES WITH PERF

19.1. THE PURPOSE OF PERF RECORD

19.2. RECORDING A PERFORMANCE PROFILE WITHOUT ROOT ACCESS

19.3. RECORDING A PERFORMANCE PROFILE WITH ROOT ACCESS

19.4. RECORDING A PERFORMANCE PROFILE IN PER-CPU MODE

19.5. CAPTURING CALL GRAPH DATA WITH PERF RECORD

19.6. ANALYZING PERF.DATA WITH PERF REPORT

19.7. INTERPRETATION OF PERF REPORT OUTPUT

19.8. GENERATING A PERF.DATA FILE THAT IS READABLE ON A DIFFERENT DEVICE

19.9. ANALYZING A PERF.DATA FILE THAT WAS CREATED ON A DIFFERENT DEVICE

19.10. WHY PERF DISPLAYS SOME FUNCTION NAMES AS RAW FUNCTION ADDRESSES

19.11. ENABLING DEBUG AND SOURCE REPOSITORIES

19.12. GETTING DEBUGINFO PACKAGES FOR AN APPLICATION OR LIBRARY USING GDB

CHAPTER 20. INVESTIGATING BUSY CPUS WITH PERF

20.1. DISPLAYING WHICH CPU EVENTS WERE COUNTED ON WITH PERF STAT

20.2. DISPLAYING WHICH CPU SAMPLES WERE TAKEN ON WITH PERF REPORT

20.3. DISPLAYING SPECIFIC CPUS DURING PROFILING WITH PERF TOP

20.4. MONITORING SPECIFIC CPUS WITH PERF RECORD AND PERF REPORT

CHAPTER 21. MONITORING APPLICATION PERFORMANCE WITH PERF

21.1. ATTACHING PERF RECORD TO A RUNNING PROCESS

21.2. CAPTURING CALL GRAPH DATA WITH PERF RECORD

21.3. ANALYZING PERF.DATA WITH PERF REPORT

CHAPTER 22. CREATING UPROBES WITH PERF

22.1. CREATING UPROBES AT THE FUNCTION LEVEL WITH PERF

22.2. CREATING UPROBES ON LINES WITHIN A FUNCTION WITH PERF

22.3. PERF SCRIPT OUTPUT OF DATA RECORDED OVER UPROBES

CHAPTER 23. PROFILING MEMORY ACCESSES WITH PERF MEM

23.1. THE PURPOSE OF PERF MEM

23.2. SAMPLING MEMORY ACCESS WITH PERF MEM

23.3. INTERPRETATION OF PERF MEM REPORT OUTPUT

CHAPTER 24. DETECTING FALSE SHARING

24.1. THE PURPOSE OF PERF C2C

24.2. DETECTING CACHE-LINE CONTENTION WITH PERF C2C

24.3. VISUALIZING A PERF.DATA FILE RECORDED WITH PERF C2C RECORD

24.4. INTERPRETATION OF PERF C2C REPORT OUTPUT

24.5. DETECTING FALSE SHARING WITH PERF C2C

CHAPTER 25. GETTING STARTED WITH FLAMEGRAPHS

25.1. INSTALLING FLAMEGRAPHS

25.2. CREATING FLAMEGRAPHS OVER THE ENTIRE SYSTEM

25.3. CREATING FLAMEGRAPHS OVER SPECIFIC PROCESSES

25.4. INTERPRETING FLAMEGRAPHS

CHAPTER 26. MONITORING PROCESSES FOR PERFORMANCE BOTTLENECKS USING PERF CIRCULAR BUFFERS

26.1. CIRCULAR BUFFERS AND EVENT-SPECIFIC SNAPSHOTS WITH PERF

26.2. COLLECTING SPECIFIC DATA TO MONITOR FOR PERFORMANCE BOTTLENECKS USING PERF CIRCULAR BUFFERS

CHAPTER 27. ADDING AND REMOVING TRACEPOINTS FROM A RUNNING PERF COLLECTOR WITHOUT STOPPING OR RESTARTING PERF

27.1. ADDING TRACEPOINTS TO A RUNNING PERF COLLECTOR WITHOUT STOPPING OR RESTARTING PERF

27.2. REMOVING TRACEPOINTS FROM A RUNNING PERF COLLECTOR WITHOUT STOPPING OR RESTARTING PERF

CHAPTER 28. PROFILING MEMORY ALLOCATION WITH NUMASTAT

28.1. DEFAULT NUMASTAT STATISTICS

28.2. VIEWING MEMORY ALLOCATION WITH NUMASTAT

CHAPTER 29. CONFIGURING AN OPERATING SYSTEM TO OPTIMIZE CPU UTILIZATION

29.1. TOOLS FOR MONITORING AND DIAGNOSING PROCESSOR ISSUES

29.2. TYPES OF SYSTEM TOPOLOGY

29.2.1. Displaying system topologies

29.3. CONFIGURING KERNEL TICK TIME

29.4. OVERVIEW OF AN INTERRUPT REQUEST

29.4.1. Balancing interrupts manually

29.4.2. Setting the smp_affinity mask

CHAPTER 30. TUNING SCHEDULING POLICY

30.1. CATEGORIES OF SCHEDULING POLICIES

30.2. STATIC PRIORITY SCHEDULING WITH SCHED_FIFO

30.3. ROUND ROBIN PRIORITY SCHEDULING WITH SCHED_RR

30.4. NORMAL SCHEDULING WITH SCHED_OTHER

30.5. SETTING SCHEDULER POLICIES

30.6. POLICY OPTIONS FOR THE CHRT COMMAND

30.7. CHANGING THE PRIORITY OF SERVICES DURING THE BOOT PROCESS

30.8. PRIORITY MAP

30.9. TUNED CPU-PARTITIONING PROFILE

30.10. USING THE TUNED CPU-PARTITIONING PROFILE FOR LOW-LATENCY TUNING

30.11. CUSTOMIZING THE CPU-PARTITIONING TUNED PROFILE

CHAPTER 31. TUNING THE NETWORK PERFORMANCE

31.1. TUNING NETWORK ADAPTER SETTINGS

31.1.1. Increasing the ring buffer size to reduce a high packet drop rate by using nmcli

31.1.2. Tuning the network device backlog queue to avoid packet drops

31.1.3. Increasing the transmit queue length of a NIC to reduce the number of transmit errors

31.2. TUNING IRQ BALANCING

31.2.1. Interrupts and interrupt handlers

31.2.2. Software interrupt requests

31.2.3. NAPI Polling

31.2.4. The irqbalance service

31.2.5. Increasing the time SoftIRQs can run on the CPU

31.3. IMPROVING THE NETWORK LATENCY

31.3.1. How the CPU power states influence the network latency

31.3.2. C-state settings in the EFI firmware

31.3.3. Disabling C-states by using a custom TuneD profile

31.3.4. Disabling C-states by using a kernel command line option


31.4. IMPROVING THE THROUGHPUT OF LARGE AMOUNTS OF CONTIGUOUS DATA STREAMS

31.4.1. Considerations before configuring jumbo frames

31.4.2. Configuring the MTU in an existing NetworkManager connection profile

31.5. TUNING TCP CONNECTIONS FOR HIGH THROUGHPUT

31.5.1. Testing the TCP throughput using iperf3

31.5.2. The system-wide TCP socket buffer settings

31.5.3. Increasing the system-wide TCP socket buffers

31.5.4. TCP Window Scaling

31.5.5. How TCP SACK reduces the packet drop rate

31.6. TUNING UDP CONNECTIONS

31.6.1. Detecting packet drops

31.6.2. Testing the UDP throughput using iperf3

31.6.3. Impact of the MTU size on UDP traffic throughput

31.6.4. Impact of the CPU speed on UDP traffic throughput

31.6.5. Increasing the system-wide UDP socket buffers

31.7. IDENTIFYING APPLICATION READ SOCKET BUFFER BOTTLENECKS

31.7.1. Identifying receive buffer collapsing and pruning

31.8. TUNING APPLICATIONS WITH A LARGE NUMBER OF INCOMING REQUESTS

31.8.1. Tuning the TCP listen backlog to process a high number of TCP connection attempts

31.9. AVOIDING LISTEN QUEUE LOCK CONTENTION

31.9.1. Avoiding RX queue lock contention: The SO_REUSEPORT and SO_REUSEPORT_BPF socket options

31.9.2. Avoiding TX queue lock contention: Transmit packet steering

31.9.3. Disabling the Generic Receive Offload feature on servers with high UDP traffic

31.10. TUNING THE DEVICE DRIVER AND NIC

31.10.1. Configuring custom NIC driver parameters

31.11. CONFIGURING NETWORK ADAPTER OFFLOAD SETTINGS

31.11.1. Temporarily setting an offload feature

31.11.2. Permanently setting an offload feature

31.12. TUNING INTERRUPT COALESCENCE SETTINGS

31.12.1. Optimizing RHEL for latency or throughput-sensitive services

31.13. BENEFITS OF TCP TIMESTAMPS

31.14. FLOW CONTROL FOR ETHERNET NETWORKS

CHAPTER 32. FACTORS AFFECTING I/O AND FILE SYSTEM PERFORMANCE

32.1. TOOLS FOR MONITORING AND DIAGNOSING I/O AND FILE SYSTEM ISSUES

32.2. AVAILABLE TUNING OPTIONS FOR FORMATTING A FILE SYSTEM

32.3. AVAILABLE TUNING OPTIONS FOR MOUNTING A FILE SYSTEM

32.4. TYPES OF DISCARDING UNUSED BLOCKS

32.5. SOLID-STATE DISKS TUNING CONSIDERATIONS

32.6. GENERIC BLOCK DEVICE TUNING PARAMETERS

CHAPTER 33. USING SYSTEMD TO MANAGE RESOURCES USED BY APPLICATIONS

33.1. ROLE OF SYSTEMD IN RESOURCE MANAGEMENT

33.2. DISTRIBUTION MODELS OF SYSTEM SOURCES

33.3. ALLOCATING SYSTEM RESOURCES USING SYSTEMD

33.4. OVERVIEW OF SYSTEMD HIERARCHY FOR CGROUPS

33.5. LISTING SYSTEMD UNITS

33.6. VIEWING SYSTEMD CGROUPS HIERARCHY

33.7. VIEWING CGROUPS OF PROCESSES

33.8. MONITORING RESOURCE CONSUMPTION

33.9. USING SYSTEMD UNIT FILES TO SET LIMITS FOR APPLICATIONS

33.10. USING SYSTEMCTL COMMAND TO SET LIMITS TO APPLICATIONS


33.11. SETTING GLOBAL DEFAULT CPU AFFINITY THROUGH MANAGER CONFIGURATION

33.12. CONFIGURING NUMA POLICIES USING SYSTEMD

33.13. NUMA POLICY CONFIGURATION OPTIONS FOR SYSTEMD

33.14. CREATING TRANSIENT CGROUPS USING SYSTEMD-RUN COMMAND

33.15. REMOVING TRANSIENT CONTROL GROUPS

CHAPTER 34. UNDERSTANDING CONTROL GROUPS

34.1. INTRODUCING CONTROL GROUPS

34.2. INTRODUCING KERNEL RESOURCE CONTROLLERS

34.3. INTRODUCING NAMESPACES

CHAPTER 35. USING CGROUPFS TO MANUALLY MANAGE CGROUPS

35.1. CREATING CGROUPS AND ENABLING CONTROLLERS IN CGROUPS-V2 FILE SYSTEM

35.2. CONTROLLING DISTRIBUTION OF CPU TIME FOR APPLICATIONS BY ADJUSTING CPU WEIGHT

35.3. MOUNTING CGROUPS-V1

35.4. SETTING CPU LIMITS TO APPLICATIONS USING CGROUPS-V1

CHAPTER 36. ANALYZING SYSTEM PERFORMANCE WITH BPF COMPILER COLLECTION

36.1. INSTALLING THE BCC-TOOLS PACKAGE

36.2. USING SELECTED BCC-TOOLS FOR PERFORMANCE ANALYSES

Using execsnoop to examine the system processes

Using opensnoop to track what files a command opens

Using biotop to examine the I/O operations on the disk

Using xfsslower to expose unexpectedly slow file system operations

CHAPTER 37. CONFIGURING AN OPERATING SYSTEM TO OPTIMIZE MEMORY ACCESS

37.1. TOOLS FOR MONITORING AND DIAGNOSING SYSTEM MEMORY ISSUES

37.2. OVERVIEW OF A SYSTEM’S MEMORY

37.3. VIRTUAL MEMORY PARAMETERS

37.4. FILE SYSTEM PARAMETERS

37.5. KERNEL PARAMETERS

37.6. SETTING MEMORY-RELATED KERNEL PARAMETERS

CHAPTER 38. CONFIGURING HUGE PAGES

38.1. AVAILABLE HUGE PAGE FEATURES

38.2. PARAMETERS FOR RESERVING HUGETLB PAGES AT BOOT TIME

38.3. CONFIGURING HUGETLB AT BOOT TIME

38.4. PARAMETERS FOR RESERVING HUGETLB PAGES AT RUN TIME

38.5. CONFIGURING HUGETLB AT RUN TIME

38.6. ENABLING TRANSPARENT HUGEPAGES

38.7. DISABLING TRANSPARENT HUGEPAGES

38.8. IMPACT OF PAGE SIZE ON TRANSLATION LOOKASIDE BUFFER SIZE

CHAPTER 39. GETTING STARTED WITH SYSTEMTAP

39.1. THE PURPOSE OF SYSTEMTAP

39.2. INSTALLING SYSTEMTAP

39.3. PRIVILEGES TO RUN SYSTEMTAP

39.4. RUNNING SYSTEMTAP SCRIPTS

39.5. USEFUL EXAMPLES OF SYSTEMTAP SCRIPTS

CHAPTER 40. CROSS-INSTRUMENTATION OF SYSTEMTAP

40.1. SYSTEMTAP CROSS-INSTRUMENTATION

40.2. INITIALIZING CROSS-INSTRUMENTATION OF SYSTEMTAP


PROVIDING FEEDBACK ON RED HAT DOCUMENTATION

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

1. Log in to the Jira website.

2. Click Create in the top navigation bar.

3. Enter a descriptive title in the Summary field.

4. Enter your suggestion for improvement in the Description field. Include links to the relevant

parts of the documentation.

5. Click Create at the bottom of the dialogue.


CHAPTER 1. GETTING STARTED WITH TUNED

As a system administrator, you can use the TuneD application to optimize the performance profile of

your system for a variety of use cases.

1.1. THE PURPOSE OF TUNED

TuneD is a service that monitors your system and optimizes the performance under certain workloads.

The core of TuneD is its profiles, which tune your system for different use cases.

TuneD is distributed with a number of predefined profiles for use cases such as:

High throughput

Low latency

Saving power

It is possible to modify the rules defined for each profile and customize how to tune a particular device.

When you switch to another profile or deactivate TuneD, all changes made to the system settings by the previous profile revert to their original state.

You can also configure TuneD to react to changes in device usage and adjust settings to improve the performance of active devices and reduce the power consumption of inactive devices.

1.2. TUNED PROFILES

A detailed analysis of a system can be very time-consuming. TuneD provides a number of predefined

profiles for typical use cases. You can also create, modify, and delete profiles.

The profiles provided with TuneD are divided into the following categories:

Power-saving profiles

Performance-boosting profiles

The performance-boosting profiles include profiles that focus on the following aspects:

Low latency for storage and network

High throughput for storage and network

Virtual machine performance

Virtualization host performance

Syntax of profile configuration

The tuned.conf file can contain one [main] section and other sections for configuring plug-in instances.

However, all sections are optional.

Lines starting with the hash sign (#) are comments.
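As a rough illustration of this layout, the following sketch writes a small profile to a scratch directory and lists its sections. The profile name example_profile, the option values in the [cpu] and [sysctl] sections, and the scratch location are assumptions chosen for illustration; they do not describe any profile shipped with TuneD.

```shell
# Hypothetical profile written to a scratch directory, not to /etc/tuned/.
profile_dir=$(mktemp -d)/example_profile
mkdir -p "$profile_dir"

cat > "$profile_dir/tuned.conf" <<'EOF'
# Lines starting with the hash sign are comments.
[main]
summary=Example profile for illustration only

# Each remaining section configures one plug-in instance.
[cpu]
governor=performance

[sysctl]
vm.swappiness=10
EOF

# Print the section names on one line; comment and option lines are skipped.
sections=$(sed -n 's/^\[\(.*\)\]$/\1/p' "$profile_dir/tuned.conf" | paste -sd ' ' -)
echo "$sections"
```

Because all sections are optional, a profile that contains only a [main] section with an include line would have the same overall shape.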

Additional resources

tuned.conf(5) man page.


1.3. THE DEFAULT TUNED PROFILE

During the installation, the best profile for your system is selected automatically. Currently, the default

profile is selected according to the following customizable rules:

| Environment | Default profile | Goal |
|---|---|---|
| Compute nodes | throughput-performance | The best throughput performance |
| Virtual machines | virtual-guest | The best performance. If you are not interested in the best performance, you can change it to the balanced or powersave profile. |
| Other cases | balanced | Balanced performance and power consumption |

Additional resources

tuned.conf(5) man page.

1.4. MERGED TUNED PROFILES

As an experimental feature, you can select multiple profiles at once. TuneD tries to merge them during the load.

If there are conflicts, the settings from the last specified profile take precedence.

Example 1.1. Low power consumption in a virtual guest

The following example optimizes the system to run in a virtual machine for the best performance and

concurrently tunes it for low power consumption, while the low power consumption is the priority:

# tuned-adm profile virtual-guest powersave

WARNING

Merging is done automatically without checking whether the resulting combination

of parameters makes sense. Consequently, the feature might tune some

parameters the opposite way, which might be counterproductive: for example,

setting the disk for high throughput by using the throughput-performance profile

and concurrently setting the disk spindown to the low value by the spindown-disk

profile.
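The precedence rule can be pictured with a toy model. The sketch below is not TuneD's merge code; it only mimics the "last specified profile wins" behavior on plain key=value pairs. The function name merge_settings and the setting names are hypothetical.

```shell
# Toy model of "the last specified profile wins"; this is not TuneD's merge code.
# Each argument holds the space-separated key=value settings of one profile.
merge_settings() {
    printf '%s\n' "$@" | tr ' ' '\n' |
        awk -F= 'NF > 1 { value[$1] = substr($0, length($1) + 2) }
                 END    { for (key in value) print key "=" value[key] }' |
        sort
}

# Hypothetical settings: both profiles set "governor", only the first sets "readahead".
merge_settings "governor=performance readahead=4096" "governor=powersave"
# prints:
# governor=powersave
# readahead=4096
```

The model also shows why the warning above matters: the merge is purely mechanical, so nothing checks whether the surviving values make sense together.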

Additional resources


tuned-adm man page.

tuned.conf(5) man page.

1.5. THE LOCATION OF TUNED PROFILES

TuneD stores profiles in the following directories:

/usr/lib/tuned/

Distribution-specific profiles are stored in this directory. Each profile has its own directory. A profile consists of the main configuration file, tuned.conf, and optionally other files, such as helper scripts.

/etc/tuned/

If you need to customize a profile, copy the profile directory into this directory, which is used for custom profiles. If two profiles have the same name, the custom profile located in /etc/tuned/ is used.
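The lookup order described here can be sketched as a small shell function. This is a minimal model of the documented precedence, not TuneD's own lookup code; the function name resolve_profile_dir and the scratch directory tree are assumptions made so that the sketch can run without touching the real directories.

```shell
# Print the directory that wins for profile $1: the custom directory is
# checked first, then the distribution directory.
# $2 and $3 override the two roots so the sketch can run on a scratch tree.
resolve_profile_dir() {
    rp_name=$1
    rp_custom=${2:-/etc/tuned}
    rp_dist=${3:-/usr/lib/tuned}
    for rp_root in "$rp_custom" "$rp_dist"; do
        if [ -f "$rp_root/$rp_name/tuned.conf" ]; then
            printf '%s\n' "$rp_root/$rp_name"
            return 0
        fi
    done
    return 1
}

# Scratch tree: "balanced" exists in both places, "powersave" only as a
# distribution profile.
root=$(mktemp -d)
mkdir -p "$root/etc/tuned/balanced" "$root/usr/lib/tuned/balanced" "$root/usr/lib/tuned/powersave"
touch "$root/etc/tuned/balanced/tuned.conf" \
      "$root/usr/lib/tuned/balanced/tuned.conf" \
      "$root/usr/lib/tuned/powersave/tuned.conf"

resolve_profile_dir balanced "$root/etc/tuned" "$root/usr/lib/tuned"
resolve_profile_dir powersave "$root/etc/tuned" "$root/usr/lib/tuned"
```

The first call prints the path under the custom root and the second the path under the distribution root, which mirrors the rule that a custom profile shadows a distribution profile of the same name.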

Additional resources

tuned.conf(5) man page.

1.6. TUNED PROFILES DISTRIBUTED WITH RHEL

The following is a list of profiles that are installed with TuneD on Red Hat Enterprise Linux.

NOTE

There might be more product-specific or third-party TuneD profiles available. Such

profiles are usually provided by separate RPM packages.

balanced

The default power-saving profile. It is intended to be a compromise between performance and power consumption. It uses auto-scaling and auto-tuning whenever possible. The only drawback is the increased latency. In the current TuneD release, it enables the CPU, disk, audio, and video plug-ins, and activates the conservative CPU governor. The radeon_powersave option uses the dpm-balanced value if it is supported; otherwise it is set to auto.

It changes the energy_performance_preference attribute to the normal energy setting. It also

changes the scaling_governor policy attribute to either the conservative or powersave CPU

governor.

powersave

A profile for maximum power saving. It can throttle the performance in order to minimize the actual power consumption. In the current TuneD release, it enables USB autosuspend, WiFi power saving, and Aggressive Link Power Management (ALPM) power savings for SATA host adapters. It also schedules multi-core power savings for systems with a low wakeup rate and activates the ondemand governor. It enables AC97 audio power saving or, depending on your system, HDA-Intel power savings with a 10-second timeout. If your system contains a supported Radeon graphics card with enabled KMS, the profile configures it to automatic power saving. On ASUS Eee PCs, a dynamic Super Hybrid Engine is enabled.

It changes the energy_performance_preference attribute to the powersave or power energy

setting. It also changes the scaling_governor policy attribute to either the ondemand or

powersave CPU governor.


NOTE

In certain cases, the balanced profile is more efficient compared to the powersave

profile.

Consider a defined amount of work that needs to be done, for example a video file that needs to be transcoded. Your machine might consume less energy if the transcoding is done at full power, because the task finishes quickly, the machine starts to idle, and it can automatically step down to very efficient power-saving modes. On the other hand, if you transcode the file on a throttled machine, the machine consumes less power during the transcoding, but the process takes longer and the overall energy consumed can be higher.

That is why the balanced profile can generally be a better option.

throughput-performance

A server profile optimized for high throughput. It disables power-saving mechanisms and enables sysctl settings that improve the throughput performance of disk and network I/O. The CPU governor is set to performance.

It changes the energy_performance_preference and scaling_governor attributes to the performance profile.

accelerator-performance

The accelerator-performance profile contains the same tuning as the throughput-performance profile. Additionally, it locks the CPU to low C-states so that the latency is less than 100 µs. This improves the performance of certain accelerators, such as GPUs.

latency-performance

A server profile optimized for low latency. It disables power-saving mechanisms and enables sysctl settings that improve latency. The CPU governor is set to performance and the CPU is locked to the low C-states (by PM QoS).

It changes the energy_performance_preference and scaling_governor attributes to the performance profile.

network-latency

A profile for low latency network tuning. It is based on the latency-performance profile. It

additionally disables transparent huge pages and NUMA balancing, and tunes several other network-

related sysctl parameters.

It inherits the latency-performance profile, which changes the energy_performance_preference and scaling_governor attributes to the performance profile.

hpc-compute

A profile optimized for high-performance computing. It is based on the latency-performance

profile.

network-throughput

A profile for throughput network tuning. It is based on the throughput-performance profile. It

additionally increases kernel network buffers.

It inherits either the latency-performance or throughput-performance profile, and changes the energy_performance_preference and scaling_governor attributes to the performance profile.

virtual-guest

A profile designed for Red Hat Enterprise Linux 9 virtual machines and VMware guests based on the


throughput-performance profile that, among other tasks, decreases virtual memory swappiness and

increases disk readahead values. It does not disable disk barriers.

It inherits the throughput-performance profile and changes the energy_performance_preference and scaling_governor attributes to the performance profile.

virtual-host

A profile designed for virtual hosts based on the throughput-performance profile that, among other

tasks, decreases virtual memory swappiness, increases disk readahead values, and enables a more

aggressive value of dirty pages writeback.

It inherits the throughput-performance profile and changes the energy_performance_preference and scaling_governor attributes to the performance profile.

oracle

A profile optimized for Oracle database loads, based on the throughput-performance profile. It additionally disables transparent huge pages and modifies other performance-related kernel parameters. This profile is provided by the tuned-profiles-oracle package.

desktop

A profile optimized for desktops, based on the balanced profile. It additionally enables scheduler

autogroups for better response of interactive applications.

optimize-serial-console

A profile that tunes down I/O activity to the serial console by reducing the printk value. This should

make the serial console more responsive. This profile is intended to be used as an overlay on other

profiles. For example:

# tuned-adm profile throughput-performance optimize-serial-console

mssql

A profile provided for Microsoft SQL Server. It is based on the throughput-performance profile.

intel-sst

A profile optimized for systems with user-defined Intel Speed Select Technology configurations. This

profile is intended to be used as an overlay on other profiles. For example:

# tuned-adm profile cpu-partitioning intel-sst

1.7. TUNED CPU-PARTITIONING PROFILE

For tuning Red Hat Enterprise Linux 9 for latency-sensitive workloads, Red Hat recommends using the cpu-partitioning TuneD profile.

Prior to Red Hat Enterprise Linux 9, the low-latency Red Hat documentation described the numerous

low-level steps needed to achieve low-latency tuning. In Red Hat Enterprise Linux 9, you can perform

low-latency tuning more efficiently by using the cpu-partitioning TuneD profile. This profile is easily

customizable according to the requirements for individual low-latency applications.

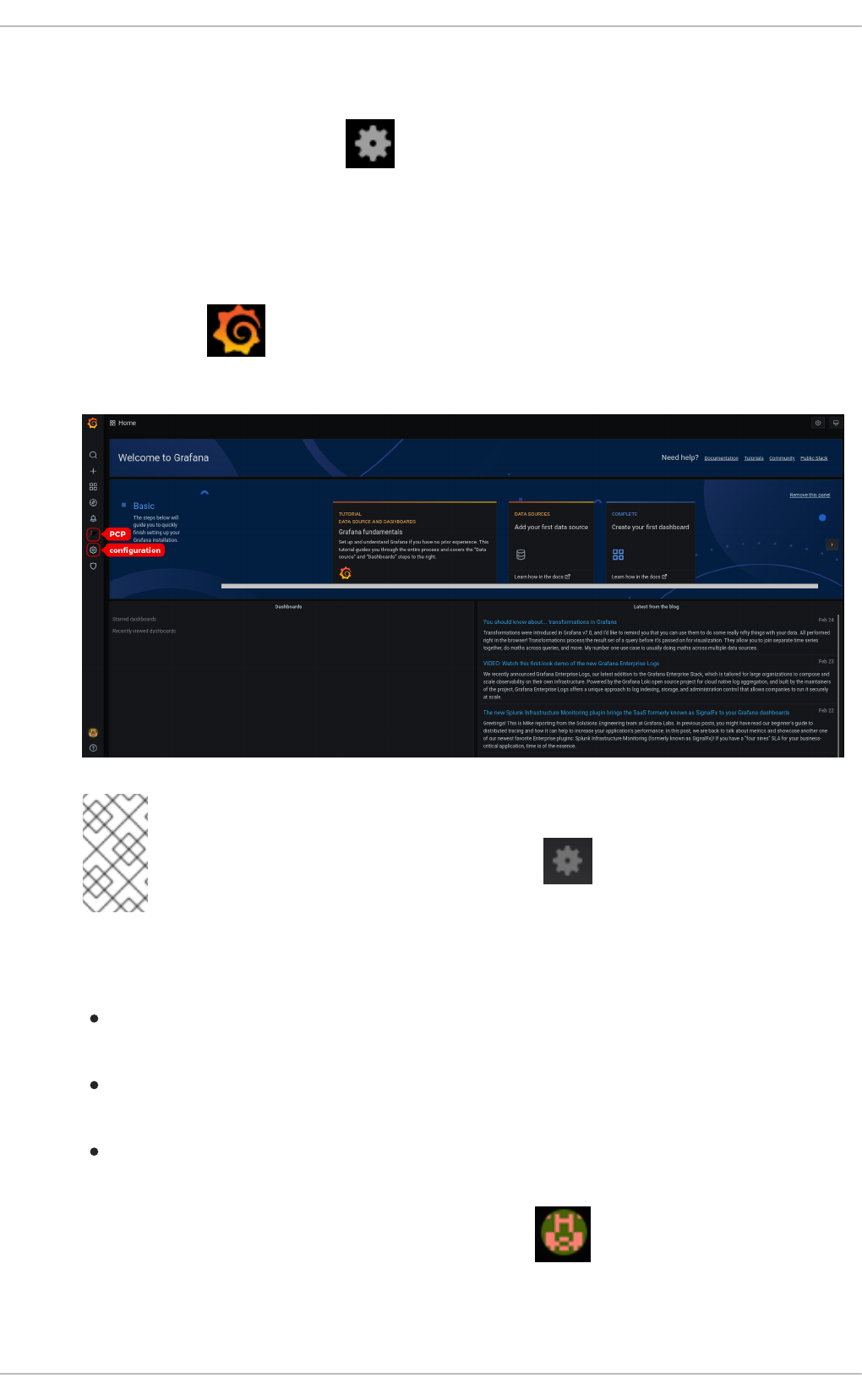

The following figure is an example to demonstrate how to use the cpu-partitioning profile. This

example uses the CPU and node layout.

Figure 1.1. Figure cpu-partitioning

CHAPTER 1. GETTING STARTED WITH TUNED

15

Figure 1.1. Figure cpu-partitioning

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file

using the following configuration options:

Isolated CPUs with load balancing

In the cpu-partitioning figure, the blocks numbered from 4 to 23 are the default isolated CPUs. The kernel scheduler's process load balancing is enabled on these CPUs. This configuration is designed for low-latency processes with multiple threads that need the kernel scheduler load balancing.

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file

using the isolated_cores=cpu-list option, which lists CPUs to isolate that will use the kernel

scheduler load balancing.

The list of isolated CPUs is comma-separated or you can specify a range using a dash, such as 3-5.

This option is mandatory. Any CPU missing from this list is automatically considered a housekeeping

CPU.

Isolated CPUs without load balancing

In the cpu-partitioning figure, the blocks numbered 2 and 3 are the isolated CPUs that do not provide any additional kernel scheduler process load balancing.

You can configure the cpu-partitioning profile in the /etc/tuned/cpu-partitioning-variables.conf file

using the no_balance_cores=cpu-list option, which lists CPUs to isolate that will not use the kernel

scheduler load balancing.

The no_balance_cores option is optional; however, any CPUs in this list must be a subset of the CPUs listed in the isolated_cores list.

Application threads that use these CPUs need to be pinned individually to each CPU.

Housekeeping CPUs

Any CPU not isolated in the cpu-partitioning-variables.conf file is automatically considered a

housekeeping CPU. On the housekeeping CPUs, all services, daemons, user processes, movable

kernel threads, interrupt handlers, and kernel timers are permitted to execute.
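To make the relationship between the cpu-list syntax and the housekeeping CPUs concrete, the following sketch expands a cpu-list and derives the CPUs that are left over. The function names expand_cpu_list and housekeeping_cpus are hypothetical helpers, and the total of 24 CPUs, numbered from 0, is an assumption based on the layout in the figure; none of this is part of TuneD itself.

```shell
# Expand a cpu-list such as "2,4-6" into "2 4 5 6".
# The body runs in a subshell so that the IFS change stays local.
expand_cpu_list() (
    IFS=,
    out=""
    for part in $1; do
        case $part in
            *-*) start=${part%-*}; end=${part#*-} ;;
            *)   start=$part; end=$part ;;
        esac
        i=$start
        while [ "$i" -le "$end" ]; do
            out="$out $i"
            i=$((i + 1))
        done
    done
    printf '%s\n' "${out# }"
)

# List every CPU from 0 to $1 - 1 that is missing from the isolated list $2.
housekeeping_cpus() (
    total=$1
    isolated=" $(expand_cpu_list "$2") "
    out=""
    i=0
    while [ "$i" -lt "$total" ]; do
        case $isolated in
            *" $i "*) ;;              # isolated, so not a housekeeping CPU
            *) out="$out $i" ;;
        esac
        i=$((i + 1))
    done
    printf '%s\n' "${out# }"
)

expand_cpu_list 2,4-6          # prints: 2 4 5 6
housekeeping_cpus 24 2-23      # prints: 0 1
```

With isolated_cores=2-23 on the assumed 24-CPU system, only CPUs 0 and 1 remain for services, daemons, and interrupt handling.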

Additional resources

tuned-profiles-cpu-partitioning(7) man page

1.8. USING THE TUNED CPU-PARTITIONING PROFILE FOR LOW-LATENCY TUNING

This procedure describes how to tune a system for low latency by using the TuneD cpu-partitioning


profile. It uses the example of a low-latency application that can use cpu-partitioning and the CPU

layout as mentioned in the cpu-partitioning figure.

The application in this case uses:

One dedicated reader thread that reads data from the network, pinned to CPU 2.

A large number of threads that process this network data, pinned to CPUs 4-23.

A dedicated writer thread that writes the processed data to the network, pinned to CPU 3.

Prerequisites

You have installed the cpu-partitioning TuneD profile by using the dnf install tuned-profiles-cpu-partitioning command as root.

Procedure

1. Edit the /etc/tuned/cpu-partitioning-variables.conf file and add the following information:

# All isolated CPUs:

isolated_cores=2-23

# Isolated CPUs without the kernel’s scheduler load balancing:

no_balance_cores=2,3

2. Set the cpu-partitioning TuneD profile:

# tuned-adm profile cpu-partitioning

3. Reboot the system.

After rebooting, the system is tuned for low latency, according to the isolation in the cpu-partitioning figure. The application can use taskset to pin the reader and writer threads to CPUs 2 and 3, and the remaining application threads to CPUs 4-23.
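Before activating the profile, it can be useful to confirm that no_balance_cores is a subset of isolated_cores. The following sketch is one possible check under stated assumptions: the helper names expand_list, is_subset, and read_option are hypothetical, and the check runs against a scratch copy of the values from the procedure above rather than the real /etc/tuned/cpu-partitioning-variables.conf file.

```shell
# Expand a cpu-list such as "2-23" or "2,3" into one CPU number per line.
expand_list() {
    printf '%s\n' "$1" | tr ',' '\n' |
        awk -F- 'NF { first = $1 + 0; last = (NF > 1 ? $2 : $1) + 0
                      for (cpu = first; cpu <= last; cpu++) print cpu }'
}

# Succeed only when every CPU in list $1 also appears in list $2.
is_subset() {
    for cpu in $(expand_list "$1"); do
        expand_list "$2" | grep -qx "$cpu" || return 1
    done
}

# Read one option from a variables file ($1 = file, $2 = option name).
read_option() {
    sed -n "s/^$2=//p" "$1"
}

# Scratch copy that holds the values used in the procedure.
conf=$(mktemp)
printf '%s\n' 'isolated_cores=2-23' 'no_balance_cores=2,3' > "$conf"

if is_subset "$(read_option "$conf" no_balance_cores)" "$(read_option "$conf" isolated_cores)"; then
    echo "no_balance_cores is a subset of isolated_cores"
else
    echo "no_balance_cores lists CPUs that are not isolated" >&2
fi
```

Pointing conf at the real variables file would apply the same check to the values you edited in step 1.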

Additional resources

tuned-profiles-cpu-partitioning(7) man page

1.9. CUSTOMIZING THE CPU-PARTITIONING TUNED PROFILE

You can extend the TuneD profile to make additional tuning changes.

For example, the cpu-partitioning profile sets the CPUs to use cstate=1. To use the cpu-partitioning profile but additionally change the CPU C-state from cstate1 to cstate0, the following procedure describes a new TuneD profile named my_profile, which inherits the cpu-partitioning profile and then sets C-state 0.

Procedure

1. Create the /etc/tuned/my_profile directory:

# mkdir /etc/tuned/my_profile


2. Create a tuned.conf file in this directory, and add the following content:

# vi /etc/tuned/my_profile/tuned.conf

[main]

summary=Customized tuning on top of cpu-partitioning

include=cpu-partitioning

[cpu]

force_latency=cstate.id:0|1

3. Use the new profile:

# tuned-adm profile my_profile

NOTE

In this example, a reboot is not required. However, if the changes in the my_profile profile require a reboot to take effect, reboot your machine.

Additional resources

tuned-profiles-cpu-partitioning(7) man page

1.10. REAL-TIME TUNED PROFILES DISTRIBUTED WITH RHEL

Real-time profiles are intended for systems running the real-time kernel. Without a special kernel build,

they do not configure the system to be real-time. On RHEL, the profiles are available from additional

repositories.

The following real-time profiles are available:

realtime

Use on bare-metal real-time systems.

Provided by the tuned-profiles-realtime package, which is available from the RT or NFV repositories.

realtime-virtual-host

Use in a virtualization host configured for real-time.

Provided by the tuned-profiles-nfv-host package, which is available from the NFV repository.

realtime-virtual-guest

Use in a virtualization guest configured for real-time.

Provided by the tuned-profiles-nfv-guest package, which is available from the NFV repository.

1.11. STATIC AND DYNAMIC TUNING IN TUNED

Understanding the difference between the two categories of system tuning that TuneD applies, static

and dynamic, is important when determining which one to use for a given situation or purpose.

Static tuning

Mainly consists of the application of predefined sysctl and sysfs settings and one-shot activation of

several configuration tools such as ethtool.


Dynamic tuning

Watches how various system components are used throughout the uptime of your system. TuneD

adjusts system settings dynamically based on that monitoring information.

For example, the hard drive is used heavily during startup and login, but is barely used later when the

user might mainly work with applications such as web browsers or email clients. Similarly, the CPU

and network devices are used differently at different times. TuneD monitors the activity of these

components and reacts to the changes in their use.

By default, dynamic tuning is disabled. To enable it, edit the /etc/tuned/tuned-main.conf file and

change the dynamic_tuning option to 1. TuneD then periodically analyzes system statistics and

uses them to update your system tuning settings. To configure the time interval in seconds between

these updates, use the update_interval option.

The currently implemented dynamic tuning algorithms try to balance performance and power saving,

and are therefore disabled in the performance profiles. Dynamic tuning for individual plug-ins can be

enabled or disabled in the TuneD profiles.
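
As a rough sketch of the configuration change described above, the following helper sets an option in a file such as /etc/tuned/tuned-main.conf. The function name set_main_option is hypothetical, and the sketch assumes that the file is a flat list of "key = value" lines; it drops any existing assignment of the key and appends the new one at the end.

```shell
# Hypothetical helper: set "key = value" in a tuned-main.conf style file.
# Assumption: the file is a flat list of "key = value" lines.
set_main_option() {
    file="$1"; key="$2"; value="$3"
    tmp="$(mktemp)" || return 1
    # Copy every line except existing assignments of the key.
    grep -Ev "^[[:space:]]*${key}[[:space:]]*=" "$file" > "$tmp" || true
    # Append the new assignment.
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    # Overwrite in place so that ownership and permissions are kept.
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

For example, as root, set_main_option /etc/tuned/tuned-main.conf dynamic_tuning 1 would enable dynamic tuning, and the same call with update_interval and a number of seconds would set the interval. The running service presumably has to be restarted before it rereads the file.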

Example 1.2. Static and dynamic tuning on a workstation

On a typical office workstation, the Ethernet network interface is inactive most of the time. Only a

few emails go in and out or some web pages might be loaded.

For those kinds of loads, the network interface does not have to run at full speed all the time, as it

does by default. TuneD has a monitoring and tuning plug-in for network devices that can detect this

low activity and then automatically lower the speed of that interface, typically resulting in lower

power usage.

If the activity on the interface increases for a longer period of time, for example because a DVD

image is being downloaded or an email with a large attachment is opened, TuneD detects this and

sets the interface speed to maximum to offer the best performance while the activity level is high.

This principle is used for other plug-ins for CPU and disks as well.

1.12. TUNED NO-DAEMON MODE

You can run TuneD in no-daemon mode, which does not require any resident memory. In this mode,

TuneD applies the settings and exits.

By default, no-daemon mode is disabled because a lot of TuneD functionality is missing in this mode,

including:

D-Bus support

Hot-plug support

Rollback support for settings

To enable no-daemon mode, include the following line in the /etc/tuned/tuned-main.conf file:

daemon = 0
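
Before relying on D-Bus, hot-plug, or rollback support, it can help to check which mode is configured. The following sketch prints the configured value of the daemon option. The function name daemon_setting is hypothetical, and the sketch assumes that the last uncommented assignment in the file wins and that an unset option means the default, 1.

```shell
# Hypothetical helper: print the configured value of the "daemon" option.
# Assumptions: the last uncommented assignment wins; if none exists, the
# default of 1 (daemon mode) applies.
daemon_setting() {
    file="${1:-/etc/tuned/tuned-main.conf}"
    value="$(sed -n 's/^[[:space:]]*daemon[[:space:]]*=[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$file" | tail -n 1)"
    printf '%s\n' "${value:-1}"
}
```

A printed 0 indicates that no-daemon mode is configured.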

1.13. INSTALLING AND ENABLING TUNED

This procedure installs and enables the TuneD application, installs TuneD profiles, and presets a default

TuneD profile for your system.

Procedure

1. Install the TuneD package:

# dnf install tuned

2. Enable and start the TuneD service:

# systemctl enable --now tuned

3. Optionally, install TuneD profiles for real-time systems:

To get the TuneD profiles for real-time systems, enable the rhel-9 repository:

# subscription-manager repos --enable=rhel-9-for-x86_64-nfv-beta-rpms

Install the profile packages:

# dnf install tuned-profiles-realtime tuned-profiles-nfv

4. Verify that a TuneD profile is active and applied:

$ tuned-adm active

Current active profile: throughput-performance

NOTE

The active profile that TuneD automatically presets depends on your machine

type and system settings.

$ tuned-adm verify

Verification succeeded, current system settings match the preset profile.

See tuned log file ('/var/log/tuned/tuned.log') for details.

1.14. LISTING AVAILABLE TUNED PROFILES

This procedure lists all TuneD profiles that are currently available on your system.

Procedure

To list all available TuneD profiles on your system, use:

$ tuned-adm list

Available profiles:

- accelerator-performance - Throughput performance based tuning with disabled higher latency STOP states

- balanced - General non-specialized TuneD profile

- desktop - Optimize for the desktop use-case

- latency-performance - Optimize for deterministic performance at the cost of increased power consumption

- network-latency - Optimize for deterministic performance at the cost of increased power consumption, focused on low latency network performance

- network-throughput - Optimize for streaming network throughput, generally only necessary on older CPUs or 40G+ networks

- powersave - Optimize for low power consumption

- throughput-performance - Broadly applicable tuning that provides excellent performance across a variety of common server workloads

- virtual-guest - Optimize for running inside a virtual guest

- virtual-host - Optimize for running KVM guests

Current active profile: balanced

To display only the currently active profile, use:

$ tuned-adm active

Current active profile: throughput-performance
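
When the list is consumed by a script rather than read by a person, only the profile names are usually needed. The following is a minimal sketch that filters tuned-adm list output on standard input. The function name list_profile_names is hypothetical, and the sketch assumes that each profile line starts with "- " followed by the name, as in the output shown above.

```shell
# Hypothetical helper: print only the profile names from `tuned-adm list`
# output read on stdin. Assumption: profile lines start with "- NAME".
list_profile_names() {
    # Capture the first run of non-space characters after the leading "- ".
    sed -n 's/^- \([^ ]*\).*/\1/p'
}
```

A possible use is tuned-adm list | list_profile_names, which skips the "Available profiles:" and "Current active profile:" lines.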

Additional resources

tuned-adm(8) man page

1.15. SETTING A TUNED PROFILE

This procedure activates a selected TuneD profile on your system.

Prerequisites

The TuneD service is running. See Installing and Enabling TuneD for details.

Procedure

1. Optionally, you can let TuneD recommend the most suitable profile for your system:

# tuned-adm recommend

throughput-performance

2. Activate a profile:

# tuned-adm profile selected-profile

Alternatively, you can activate a combination of multiple profiles:

# tuned-adm profile selected-profile1 selected-profile2

Example 1.3. A virtual machine optimized for low power consumption

The following example optimizes the system to run in a virtual machine with the best

performance and concurrently tunes it for low power consumption, with low power

consumption as the priority:

# tuned-adm profile virtual-guest powersave

3. View the current active TuneD profile on your system:

# tuned-adm active

Current active profile: selected-profile

4. Reboot the system:

# reboot

Verification

Verify that the TuneD profile is active and applied:

$ tuned-adm verify

Verification succeeded, current system settings match the preset profile.

See tuned log file ('/var/log/tuned/tuned.log') for details.
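
The activation and verification steps can be combined in a small wrapper. This is only a sketch: the names TUNED_ADM and apply_profiles are hypothetical, the wrapper uses only the tuned-adm profile and tuned-adm verify subcommands shown above, and it skips the reboot step, so it fits only profiles that do not need a reboot.

```shell
# Command used to talk to TuneD. Override it, for example with "echo",
# to see what would be run without changing the system.
TUNED_ADM="${TUNED_ADM:-tuned-adm}"

# Hypothetical helper: activate one or more profiles, then verify them.
apply_profiles() {
    if [ "$#" -eq 0 ]; then
        echo "usage: apply_profiles PROFILE..." >&2
        return 2
    fi
    # Run the verification only if the activation succeeded.
    "$TUNED_ADM" profile "$@" && "$TUNED_ADM" verify
}
```

For instance, apply_profiles virtual-guest powersave mirrors Example 1.3 and then runs the verification.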

Additional resources

tuned-adm(8) man page

1.16. USING THE TUNED D-BUS INTERFACE

You can directly communicate with TuneD at runtime through the TuneD D-Bus interface to control a

variety of TuneD services.

You can use the busctl or dbus-send commands to access the D-Bus API.

NOTE

Although you can use either the busctl or dbus-send command, the busctl command is

a part of systemd and, therefore, present on most hosts already.

1.16.1. Using the TuneD D-Bus interface to show available TuneD D-Bus API methods

You can see the D-Bus API methods available to use with TuneD by using the TuneD D-Bus interface.

Prerequisites

The TuneD service is running. See Installing and Enabling TuneD for details.

Procedure

To see the available TuneD API methods, run:

$ busctl introspect com.redhat.tuned /Tuned com.redhat.tuned.control

The output should look similar to the following:

NAME                           TYPE   SIGNATURE RESULT/VALUE FLAGS
.active_profile                method -         s            -
.auto_profile                  method -         (bs)         -
.disable                       method -         b            -
.get_all_plugins               method -         a{sa{ss}}    -
.get_plugin_documentation      method s         s            -
.get_plugin_hints              method s         a{ss}        -
.instance_acquire_devices      method ss        (bs)         -
.is_running                    method -         b            -
.log_capture_finish            method s         s            -
.log_capture_start             method ii        s            -
.post_loaded_profile           method -         s            -
.profile_info                  method s         (bsss)       -
.profile_mode                  method -         (ss)         -
.profiles                      method -         as           -
.profiles2                     method -         a(ss)        -
.recommend_profile             method -         s            -
.register_socket_signal_path   method s         b            -
.reload                        method -         b            -
.start                         method -         b            -
.stop                          method -         b            -
.switch_profile                method s         (bs)         -
.verify_profile                method -         b            -
.verify_profile_ignore_missing method -         b            -
.profile_changed               signal sbs       -            -

You can find descriptions of the different available methods in the TuneD upstream repository.
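
To reduce the introspection output to a plain list of method names, the rows can be filtered by their TYPE column. The following is a minimal sketch; the function name tuned_dbus_methods is hypothetical, and it assumes the whitespace-separated column layout shown above.

```shell
# Hypothetical helper: print the method names from `busctl introspect`
# output read on stdin. Assumption: columns are NAME TYPE SIGNATURE ...
tuned_dbus_methods() {
    # Keep rows whose TYPE is "method" and strip the leading dot from NAME.
    awk '$2 == "method" { sub(/^\./, "", $1); print $1 }'
}
```

It could be used as busctl introspect com.redhat.tuned /Tuned com.redhat.tuned.control | tuned_dbus_methods, which leaves out the header row and the profile_changed signal.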

1.16.2. Using the TuneD D-Bus interface to change the active TuneD profile

You can replace the active TuneD profile with your desired TuneD profile by using the TuneD D-Bus

interface.

Prerequisites

The TuneD service is running. See Installing and Enabling TuneD for details.

Procedure

To change the active TuneD profile, run:

$ busctl call com.redhat.tuned /Tuned com.redhat.tuned.control switch_profile s profile

(bs) true "OK"

Replace profile with the name of your desired profile.
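
The reply has the signature (bs): a boolean followed by a message string. A script can turn that reply into an exit status, as in the following sketch. The function name switch_succeeded is hypothetical, and the sketch assumes the single-line reply format shown above.

```shell
# Hypothetical helper: read a busctl reply such as
#   (bs) true "OK"
# on stdin. Returns 0 if the boolean is true; otherwise prints the
# message to stderr and returns 1.
switch_succeeded() {
    # Accept a final line even if it lacks a trailing newline.
    read -r signature ok message || [ -n "$signature" ] || return 1
    if [ "$signature" = "(bs)" ] && [ "$ok" = "true" ]; then
        return 0
    fi
    printf 'switch_profile failed: %s\n' "$message" >&2
    return 1
}
```

Piping the busctl call command from this procedure into switch_succeeded would then let a script branch on the result.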

Verification
