Amazon Athena

User Guide

Amazon Athena: User Guide

Copyright © 2018 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

Amazon Athena User Guide

Amazon's trademarks and trade dress may not be used in connection with any product or service that is not Amazon's, in any manner

that is likely to cause confusion among customers, or in any manner that disparages or discredits Amazon. All other trademarks not

owned by Amazon are the property of their respective owners, who may or may not be affiliated with, connected to, or sponsored by

Amazon.

Amazon Athena User Guide

Table of Contents

What is Amazon Athena? .................................................................................................................... 1

When should I use Athena? ......................................................................................................... 1

Accessing Athena ....................................................................................................................... 1

Understanding Tables, Databases, and the Data Catalog ................................................................. 2

Release Notes .................................................................................................................................... 4

February 2, 2018 ....................................................................................................................... 4

January 19, 2018 ....................................................................................................................... 4

November 13, 2017 ................................................................................................................... 5

November 1, 2017 ..................................................................................................................... 5

October 19, 2017 ....................................................................................................................... 5

October 3, 2017 ........................................................................................................................ 6

September 25, 2017 ................................................................................................................... 6

August 14, 2017 ........................................................................................................................ 6

August 4, 2017 .......................................................................................................................... 6

June 22, 2017 ........................................................................................................................... 6

June 8, 2017 ............................................................................................................................. 6

May 19, 2017 ............................................................................................................................ 6

Improvements .................................................................................................................... 7

Bug Fixes .......................................................................................................................... 7

April 4, 2017 ............................................................................................................................. 7

Features ............................................................................................................................ 7

Improvements .................................................................................................................... 7

Bug Fixes .......................................................................................................................... 7

March 24, 2017 ......................................................................................................................... 8

Features ............................................................................................................................ 8

Improvements .................................................................................................................... 9

Bug Fixes .......................................................................................................................... 9

February 20, 2017 ...................................................................................................................... 9

Features ............................................................................................................................ 9

Improvements .................................................................................................................. 11

Setting Up ....................................................................................................................................... 12

Sign Up for AWS ...................................................................................................................... 12

To create an AWS account ................................................................................................. 12

Create an IAM User .................................................................................................................. 12

To create a group for administrators ................................................................................... 12

To create an IAM user for yourself, add the user to the administrators group, and create a

password for the user ....................................................................................................... 13

Attach Managed Policies for Using Athena .................................................................................. 13

Getting Started ................................................................................................................................ 14

Prerequisites ............................................................................................................................ 14

Step 1: Create a Database ......................................................................................................... 14

Step 2: Create a Table .............................................................................................................. 15

Step 3: Query Data .................................................................................................................. 16

Integration with AWS Glue ................................................................................................................ 18

Upgrading to the AWS Glue Data Catalog Step-by-Step ................................................................ 19

Step 1 - Allow a User to Perform the Upgrade ..................................................................... 19

Step 2 - Update Customer-Managed/Inline Policies Associated with Athena Users ..................... 19

Step 3 - Choose Upgrade in the Athena Console .................................................................. 20

FAQ: Upgrading to the AWS Glue Data Catalog ............................................................................ 21

Why should I upgrade to the AWS Glue Data Catalog? .......................................................... 21

Are there separate charges for AWS Glue? ........................................................................... 22

Upgrade process FAQ ........................................................................................................ 22

Best Practices When Using Athena with AWS Glue ........................................................................ 23

Database, Table, and Column Names .................................................................................. 24

iii

Amazon Athena User Guide

Using AWS Glue Crawlers .................................................................................................. 24

Working with CSV Files ..................................................................................................... 28

Using AWS Glue Jobs for ETL with Athena ........................................................................... 30

Connecting to Amazon Athena with ODBC and JDBC Drivers .................................................................. 33

Using Athena with the JDBC Driver ............................................................................................ 33

Download the JDBC Driver ................................................................................................ 33

Specify the Connection String ............................................................................................ 33

Specify the JDBC Driver Class Name ................................................................................... 33

Provide the JDBC Driver Credentials ................................................................................... 33

Configure the JDBC Driver Options ..................................................................................... 34

Connecting to Amazon Athena with ODBC .................................................................................. 35

Amazon Athena ODBC Driver License Agreement ................................................................. 35

Windows ......................................................................................................................... 35

Linux .............................................................................................................................. 35

OSX ................................................................................................................................ 36

ODBC Driver Connection String .......................................................................................... 36

Documentation ................................................................................................................ 36

Security ........................................................................................................................................... 37

Setting User and Amazon S3 Bucket Permissions .......................................................................... 37

IAM Policies for User Access .............................................................................................. 37

AmazonAthenaFullAccess Managed Policy ........................................................................... 37

AWSQuicksightAthenaAccess Managed Policy ....................................................................... 39

Access through JDBC Connections ...................................................................................... 40

Amazon S3 Permissions .................................................................................................... 40

Cross-account Permissions ................................................................................................. 40

Configuring Encryption Options ................................................................................................. 41

Permissions for Encrypting and Decrypting Data .................................................................. 42

Creating Tables Based on Encrypted Datasets in Amazon S3 .................................................. 42

Encrypting Query Results Stored in Amazon S3 .................................................................... 44

Encrypting Query Results stored in Amazon S3 Using the JDBC Driver ..................................... 45

Working with Source Data ................................................................................................................. 46

Tables and Databases Creation Process in Athena ......................................................................... 46

Requirements for Tables in Athena and Data in Amazon S3 ................................................... 46

Functions Supported ......................................................................................................... 47

CREATE TABLE AS Type Statements Are Not Supported ......................................................... 47

Transactional Data Transformations Are Not Supported ......................................................... 47

Operations That Change Table States Are ACID .................................................................... 47

All Tables Are EXTERNAL ................................................................................................... 47

UDF and UDAF Are Not Supported ..................................................................................... 47

To create a table using the AWS Glue Data Catalog .............................................................. 47

To create a table using the wizard ...................................................................................... 48

To create a database using Hive DDL .................................................................................. 48

To create a table using Hive DDL ....................................................................................... 49

Names for Tables, Databases, and Columns ................................................................................. 50

Table names and table column names in Athena must be lowercase ........................................ 50

Athena table, database, and column names allow only underscore special characters ................. 50

Names that begin with an underscore ................................................................................. 50

Table names that include numbers ..................................................................................... 50

Table Location in Amazon S3 ..................................................................................................... 51

Partitioning Data ...................................................................................................................... 51

Scenario 1: Data already partitioned and stored on S3 in hive format ...................................... 52

Scenario 2: Data is not partitioned ..................................................................................... 53

Converting to Columnar Formats ............................................................................................... 55

Overview ......................................................................................................................... 55

Before you begin .............................................................................................................. 14

Example: Converting data to Parquet using an EMR cluster .................................................... 57

Querying Data in Amazon Athena Tables ............................................................................................ 59

iv

Amazon Athena User Guide

Query Results .......................................................................................................................... 59

Saving Query Results ........................................................................................................ 59

Viewing Query History .............................................................................................................. 60

Viewing Query History ...................................................................................................... 60

Querying Arrays ....................................................................................................................... 61

Creating Arrays ................................................................................................................ 61

Concatenating Arrays ........................................................................................................ 62

Converting Array Data Types ............................................................................................. 63

Finding Lengths ............................................................................................................... 64

Accessing Array Elements .................................................................................................. 64

Flattening Nested Arrays ................................................................................................... 65

Creating Arrays from Subqueries ........................................................................................ 67

Filtering Arrays ................................................................................................................ 68

Sorting Arrays .................................................................................................................. 69

Using Aggregation Functions with Arrays ............................................................................ 69

Converting Arrays to Strings .............................................................................................. 70

Querying Arrays with ROWS and STRUCTS .................................................................................. 70

Creating a ROW ................................................................................................................. 70

Changing Field Names in Arrays Using CAST ........................................................................ 71

Filtering Arrays Using the . Notation .................................................................................. 71

Filtering Arrays with Nested Values .................................................................................... 72

Filtering Arrays Using UNNEST ........................................................................................... 73

Finding Keywords in Arrays ................................................................................................ 73

Ordering Values in Arrays .................................................................................................. 74

Querying Arrays with Maps ....................................................................................................... 75

Examples ......................................................................................................................... 61

Querying JSON ........................................................................................................................ 76

Tips for Parsing Arrays with JSON ...................................................................................... 76

Extracting Data from JSON ................................................................................................ 78

Searching for Values ......................................................................................................... 80

Obtaining Length and Size of JSON Arrays .......................................................................... 81

Querying Geospatial Data ................................................................................................................. 83

What is a Geospatial Query? ...................................................................................................... 83

Input Data Formats and Geometry Data Types ............................................................................. 83

Input Data Formats .......................................................................................................... 83

Geometry Data Types ....................................................................................................... 84

List of Supported Geospatial Functions ....................................................................................... 84

Before You Begin ............................................................................................................. 84

Constructor Functions ....................................................................................................... 85

Geospatial Relationship Functions ...................................................................................... 86

Operation Functions ......................................................................................................... 88

Accessor Functions ........................................................................................................... 89

Examples: Geospatial Queries .................................................................................................... 92

Querying AWS Service Logs ............................................................................................................... 94

Querying AWS CloudTrail Logs ................................................................................................... 94

Creating the Table for CloudTrail Logs ................................................................................ 95

Tips for Querying CloudTrail Logs ...................................................................................... 96

Querying Amazon CloudFront Logs ............................................................................................ 97

Creating the Table for CloudFront Logs ............................................................................... 97

Example Query for CloudFront logs .................................................................................... 98

Querying Classic Load Balancer Logs .......................................................................................... 98

Creating the Table for Elastic Load Balancing Logs ............................................................... 98

Example Queries for Elastic Load Balancing Logs .................................................................. 99

Querying Application Load Balancer Logs .................................................................................. 100

Creating the Table for ALB Logs ....................................................................................... 100

Example Queries for ALB logs .......................................................................................... 101

Querying Amazon VPC Flow Logs ............................................................................................. 101

v

Amazon Athena User Guide

Creating the Table for VPC Flow Logs ............................................................................... 101

Example Queries for Amazon VPC Flow Logs ..................................................................... 102

Monitoring Logs and Troubleshooting ............................................................................................... 103

Logging Amazon Athena API Calls with AWS CloudTrail ............................................................... 103

Athena Information in CloudTrail ...................................................................................... 103

Understanding Athena Log File Entries .............................................................................. 104

Troubleshooting ..................................................................................................................... 105

SerDe Reference ............................................................................................................................. 107

Using a SerDe ........................................................................................................................ 107

To Use a SerDe in Queries ............................................................................................... 107

Supported SerDes and Data Formats ........................................................................................ 108

Avro SerDe .................................................................................................................... 109

RegexSerDe for Processing Apache Web Server Logs ........................................................... 111

CloudTrail SerDe ............................................................................................................. 112

OpenCSVSerDe for Processing CSV ................................................................................... 114

Grok SerDe .................................................................................................................... 115

JSON SerDe Libraries ...................................................................................................... 117

LazySimpleSerDe for CSV, TSV, and Custom-Delimited Files ................................................. 120

ORC SerDe ..................................................................................................................... 125

Parquet SerDe ................................................................................................................ 127

Compression Formats .............................................................................................................. 130

DDL and SQL Reference .................................................................................................................. 131

Data Types ............................................................................................................................ 131

List of Supported Data Types in Athena ............................................................................ 131

DDL Statements ..................................................................................................................... 132

ALTER DATABASE SET DBPROPERTIES ............................................................................... 132

ALTER TABLE ADD PARTITION .......................................................................................... 133

ALTER TABLE DROP PARTITION ........................................................................................ 134

ALTER TABLE RENAME PARTITION .................................................................................... 134

ALTER TABLE SET LOCATION ........................................................................................... 135

ALTER TABLE SET TBLPROPERTIES ................................................................................... 135

CREATE DATABASE .......................................................................................................... 136

CREATE TABLE ............................................................................................................... 136

DESCRIBE TABLE ............................................................................................................ 139

DROP DATABASE ............................................................................................................ 140

DROP TABLE .................................................................................................................. 140

MSCK REPAIR TABLE ....................................................................................................... 141

SHOW COLUMNS ........................................................................................................... 141

SHOW CREATE TABLE ..................................................................................................... 142

SHOW DATABASES ......................................................................................................... 142

SHOW PARTITIONS ......................................................................................................... 142

SHOW TABLES ............................................................................................................... 143

SHOW TBLPROPERTIES ................................................................................................... 143

SQL Queries, Functions, and Operators ..................................................................................... 144

SELECT .......................................................................................................................... 144

Unsupported DDL ................................................................................................................... 148

Limitations ............................................................................................................................. 149

Code Samples and Service Limits ..................................................................................................... 150

Code Samples ........................................................................................................................ 150

Create a Client to Access Athena ...................................................................................... 150

Start Query Execution ..................................................................................................... 151

Stop Query Execution ..................................................................................................... 154

List Query Executions ...................................................................................................... 155

Create a Named Query .................................................................................................... 156

Delete a Named Query .................................................................................................... 156

List Named Queries ........................................................................................................ 157

Service Limits ......................................................................................................................... 158

vi

Amazon Athena User Guide

When should I use Athena?

What is Amazon Athena?

Amazon Athena is an interactive query service that makes it easy to analyze data directly in Amazon

Simple Storage Service (Amazon S3) using standard SQL. With a few actions in the AWS Management

Console, you can point Athena at your data stored in Amazon S3 and begin using standard SQL to run

ad-hoc queries and get results in seconds.

Athena is serverless, so there is no infrastructure to set up or manage, and you pay only for the queries

you run. Athena scales automatically—executing queries in parallel—so results are fast, even with large

datasets and complex queries.

When should I use Athena?

Athena helps you analyze unstructured, semi-structured, and structured data stored in Amazon S3.

Examples include CSV, JSON, or columnar data formats such as Apache Parquet and Apache ORC. You

can use Athena to run ad-hoc queries using ANSI SQL, without the need to aggregate or load the data

into Athena.

Athena integrates with the AWS Glue Data Catalog, which offers a persistent metadata store for your

data in Amazon S3. This allows you to create tables and query data in Athena based on a central

metadata store available throughout your AWS account and integrated with the ETL and data discovery

features of AWS Glue. For more information, see Integration with AWS Glue (p. 18) and What is AWS

Glue in the AWS Glue Developer Guide.

Athena integrates with Amazon QuickSight for easy data visualization.

You can use Athena to generate reports or to explore data with business intelligence tools or SQL

clients connected with a JDBC or an ODBC driver. For more information, see What is Amazon QuickSight

in the Amazon QuickSight User Guide and Connecting to Amazon Athena with ODBC and JDBC

Drivers (p. 33).

You can create named queries with AWS CloudFormation and run them in Athena. Named queries

allow you to map a query name to a query and then call the query multiple times referencing it

by its name. For information, see CreateNamedQuery in the Amazon Athena API Reference, and

AWS::Athena::NamedQuery in the AWS CloudFormation User Guide.

Accessing Athena

You can access Athena using the AWS Management Console, through a JDBC connection, using the

Athena API, or using the Athena CLI.

• To get started with the console, see Getting Started (p. 14).

• To learn how to use JDBC, see Connecting to Amazon Athena with JDBC (p. 33).

• To use the Athena API, see the Amazon Athena API Reference.

• To use the CLI, install the AWS CLI and then type aws athena help from the command line to see

available commands. For information about available commands, see the AWS Athena command line

reference.

1

Amazon Athena User Guide

Understanding Tables, Databases, and the Data Catalog

Understanding Tables, Databases, and the Data

Catalog

In Athena, tables and databases are containers for the metadata definitions that define a schema for

underlying source data. For each dataset, a table needs to exist in Athena. The metadata in the table

tells Athena where the data is located in Amazon S3, and specifies the structure of the data, for example,

column names, data types, and the name of the table. Databases are a logical grouping of tables, and

also hold only metadata and schema information for a dataset.

For each dataset that you'd like to query, Athena must have an underlying table it will use for obtaining

and returning query results. Therefore, before querying data, a table must be registered in Athena. The

registration occurs when you either create tables automatically or manually.

Regardless of how the tables are created, the tables creation process registers the dataset with Athena.

This registration occurs either in the AWS Glue Data Catalog, or in the internal Athena data catalog and

enables Athena to run queries on the data.

• To create a table automatically, use an AWS Glue crawler from within Athena. For more information

about AWS Glue and crawlers, see Integration with AWS Glue (p. 18). When AWS Glue creates a

table, it registers it in its own AWS Glue Data Catalog. Athena uses the AWS Glue Data Catalog to store

and retrieve this metadata, using it when you run queries to analyze the underlying dataset.

The AWS Glue Data Catalog is accessible throughout your AWS account. Other AWS services can share

the AWS Glue Data Catalog, so you can see databases and tables created throughout your organization

using Athena and vice versa. In addition, AWS Glue lets you automatically discover data schema and

extract, transform, and load (ETL) data.

Note

You use the internal Athena data catalog in regions where AWS Glue is not available and where

the AWS Glue Data Catalog cannot be used.

• To create a table manually:

• Use the Athena console to run the Create Table Wizard.

• Use the Athena console to write Hive DDL statements in the Query Editor.

• Use the Athena API or CLI to execute a SQL query string with DDL statements.

• Use the Athena JDBC or ODBC driver.

When you create tables and databases manually, Athena uses HiveQL data definition language (DDL)

statements such as CREATE TABLE, CREATE DATABASE, and DROP TABLE under the hood to create

tables and databases in the AWS Glue Data Catalog, or in its internal data catalog in those regions where

AWS Glue is not available.

Note

If you have tables in Athena created before August 14, 2017, they were created in an Athena-

managed data catalog that exists side-by-side with the AWS Glue Data Catalog until you

choose to update. For more information, see Upgrading to the AWS Glue Data Catalog Step-by-

Step (p. 19).

When you query an existing table, under the hood, Amazon Athena uses Presto, a distributed SQL

engine. We have examples with sample data within Athena to show you how to create a table and then

issue a query against it using Athena. Athena also has a tutorial in the console that helps you get started

creating a table based on data that is stored in Amazon S3.

• For a step-by-step tutorial on creating a table and write queries in the Athena Query Editor, see

Getting Started (p. 14).

2

Amazon Athena User Guide

February 2, 2018

Release Notes

Describes Amazon Athena features, improvements, and bug fixes by release date.

Contents

• February 2, 2018 (p. 4)

• January 19, 2018 (p. 4)

• November 13, 2017 (p. 5)

• November 1, 2017 (p. 5)

• October 19, 2017 (p. 5)

• October 3, 2017 (p. 6)

• September 25, 2017 (p. 6)

• August 14, 2017 (p. 6)

• August 4, 2017 (p. 6)

• June 22, 2017 (p. 6)

• June 8, 2017 (p. 6)

• May 19, 2017 (p. 6)

• Improvements (p. 7)

• Bug Fixes (p. 7)

• April 4, 2017 (p. 7)

• Features (p. 7)

• Improvements (p. 7)

• Bug Fixes (p. 7)

• March 24, 2017 (p. 8)

• Features (p. 8)

• Improvements (p. 9)

• Bug Fixes (p. 9)

• February 20, 2017 (p. 9)

• Features (p. 9)

• Improvements (p. 11)

February 2, 2018

Published on 2018-02-12

Added an ability to securely offload intermediate data to disk for memory-intensive queries that use the

GROUP BY clause. This improves the reliability of such queries, preventing "Query resource exhausted"

errors.

January 19, 2018

Published on 2018-01-19

Athena uses Presto, an open-source distributed query engine, to run queries.

With Athena, there are no versions to manage. We have transparently upgraded the underlying engine in

Athena to a version based on Presto version 0.172. No action is required on your end.

4

Amazon Athena User Guide

November 13, 2017

With the upgrade, you can now use Presto 0.172 Functions and Operators, including Presto 0.172

Lambda Expressions in Athena.

Major updates for this release, including the community-contributed fixes, include:

• Support for ignoring headers. You can use the skip.header.line.count property when defining

tables, to allow Athena to ignore headers.

• Support for the CHAR(n) data type in STRING functions. The range for CHAR(n) is [1.255], while

the range for VARCHAR(n) is [1,65535].

• Support for correlated subqueries.

• Support for Presto Lambda expressions and functions.

• Improved performance of the DECIMAL type and operators.

• Support for filtered aggregations, such as SELECT sum(col_name) FILTER, where id > 0.

• Push-down predicates for the DECIMAL, TINYINT, SMALLINT, and REAL data types.

• Support for quantified comparison predicates: ALL, ANY, and SOME.

• Added functions: arrays_overlap(), array_except(), levenshtein_distance(),

codepoint(), skewness(), kurtosis(), and typeof().

• Added a variant of the from_unixtime() function that takes a timezone argument.

• Added the bitwise_and_agg() and bitwise_or_agg() aggregation functions.

• Added the xxhash64() and to_big_endian_64() functions.

• Added support for escaping double quotes or backslashes using a backslash with a JSON path

subscript to the json_extract() and json_extract_scalar() functions. This changes the

semantics of any invocation using a backslash, as backslashes were previously treated as normal

characters.

For a complete list of functions and operators, see SQL Queries, Functions, and Operators (p. 144) in

this guide, and Presto 0.172 Functions.

Athena does not support all of Presto's features. For more information, see Limitations (p. 149).

November 13, 2017

Published on 2017-11-13

Added support for connecting Athena to the ODBC Driver. For information, see Connecting to Amazon

Athena with ODBC (p. 35).

November 1, 2017

Published on 2017-11-01

Added support for querying geospatial data, and for Asia Pacific (Seoul), Asia Pacific (Mumbai), and

EU (London) regions. For information, see Querying Geospatial Data (p. 83) and AWS Regions and

Endpoints.

October 19, 2017

Published on 2017-10-19

Added support for EU (Frankfurt). For a list of supported regions, see AWS Regions and Endpoints.

5

Amazon Athena User Guide

October 3, 2017

October 3, 2017

Published on 2017-10-03

Create named Athena queries with CloudFormation. For more information, see

AWS::Athena::NamedQuery in the AWS CloudFormation User Guide.

September 25, 2017

Published on 2017-09-25

Added support for Asia Pacific (Sydney). For a list of supported regions, see AWS Regions and Endpoints.

August 14, 2017

Published on 2017-08-14

Added integration with the AWS Glue Data Catalog and a migration wizard for updating from the Athena

managed data catalog to the AWS Glue Data Catalog. For more information, see Integration with AWS

Glue (p. 18).

August 4, 2017

Published on 2017-08-04

Added support for Grok SerDe, which provides easier pattern matching for records in unstructured text

files such as logs. For more information, see Grok SerDe (p. 115). Added keyboard shortcuts to scroll

through query history using the console (CTRL +

⇧/⇩

using Windows, CMD +

⇧/⇩

using Mac).

June 22, 2017

Published on 2017-06-22

Added support for Asia Pacific (Tokyo) and Asia Pacific (Singapore). For a list of supported regions, see

AWS Regions and Endpoints.

June 8, 2017

Published on 2017-06-08

Added support for EU (Ireland). For more information, see AWS Regions and Endpoints.

May 19, 2017

Published on 2017-05-19

Added an Amazon Athena API and AWS CLI support for Athena; updated JDBC driver to version 1.1.0;

fixed various issues.

6

Amazon Athena User Guide

Improvements

• Amazon Athena enables application programming for Athena. For more information, see Amazon

Athena API Reference. The latest AWS SDKs include support for the Athena API. For links to

documentation and downloads, see the SDKs section in Tools for Amazon Web Services.

• The AWS CLI includes new commands for Athena. For more information, see the AWS CLI Reference for

Athena.

• A new JDBC driver 1.1.0 is available, which supports the new Athena API as well as the latest features

and bug fixes. Download the driver at https://s3.amazonaws.com/athena-downloads/drivers/

AthenaJDBC41-1.1.0.jar. We recommend upgrading to the latest Athena JDBC driver; however, you

may still use the earlier driver version. Earlier driver versions do not support the Athena API. For more

information, see Using Athena with the JDBC Driver (p. 33).

• Actions specific to policy statements in earlier versions of Athena have been deprecated. If you

upgrade to JDBC driver version 1.1.0 and have customer-managed or inline IAM policies attached to

JDBC users, you must update the IAM policies. In contrast, earlier versions of the JDBC driver do not

support the Athena API, so you can specify only deprecated actions in policies attached to earlier

version JDBC users. For this reason, you shouldn't need to update customer-managed or inline IAM

policies.

• These policy-specific actions were used in Athena before the release of the Athena API. Use these

deprecated actions in policies only with JDBC drivers earlier than version 1.1.0. If you are upgrading

the JDBC driver, replace policy statements that allow or deny deprecated actions with the appropriate

API actions as listed or errors will occur:

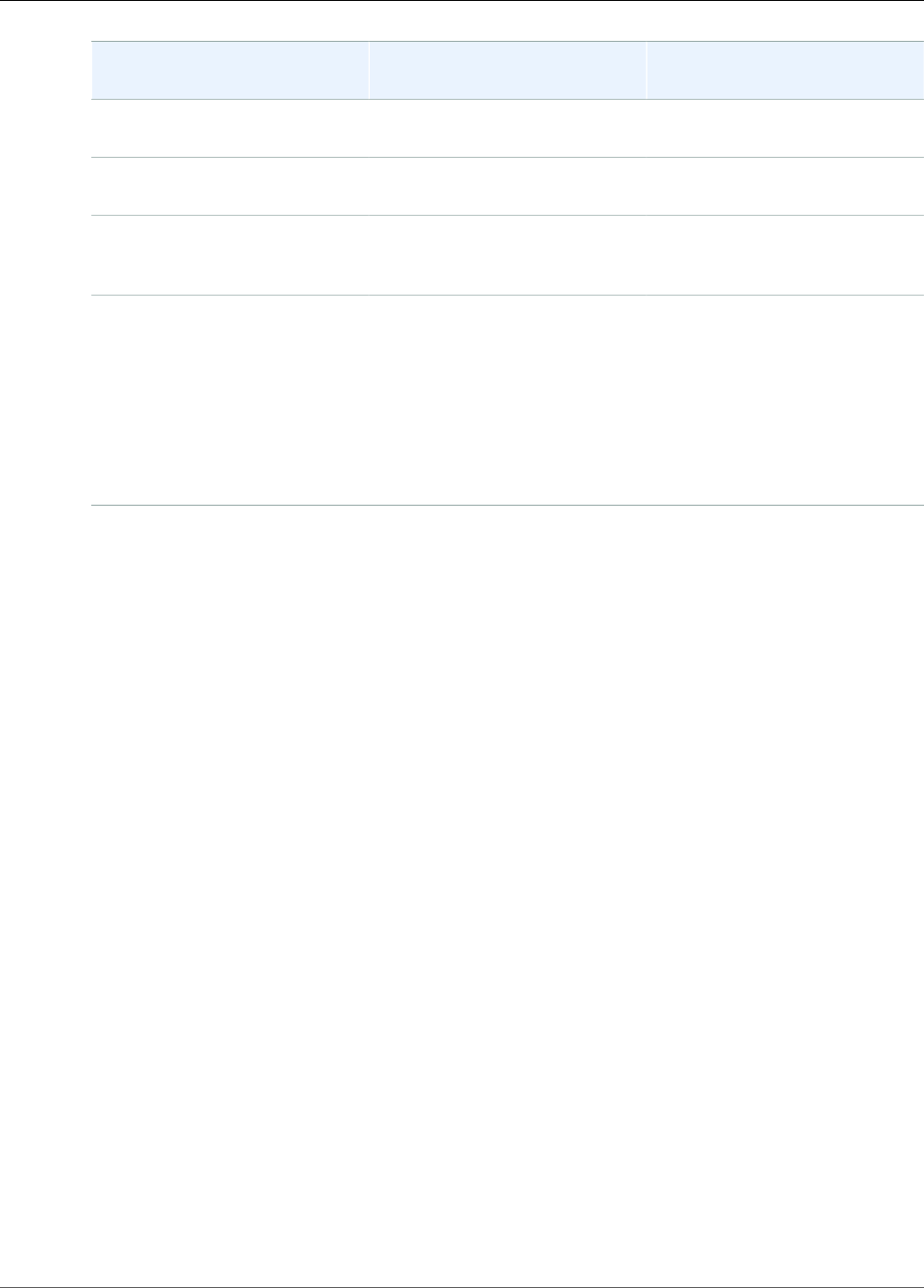

Deprecated Policy-Specific Action Corresponding Athena API Action

athena:RunQuery athena:StartQueryExecution

athena:CancelQueryExecution athena:StopQueryExecution

athena:GetQueryExecutions athena:ListQueryExecutions

Improvements

• Increased the query string length limit to 256 KB.

Bug Fixes

• Fixed an issue that caused query results to look malformed when scrolling through results in the

console.

• Fixed an issue where a \u0000 character string in Amazon S3 data files would cause errors.

• Fixed an issue that caused requests to cancel a query made through the JDBC driver to fail.

• Fixed an issue that caused the AWS CloudTrail SerDe to fail with Amazon S3 data in US East (Ohio).

• Fixed an issue that caused DROP TABLE to fail on a partitioned table.

April 4, 2017

Published on 2017-04-04

7

Amazon Athena User Guide

Features

Added support for Amazon S3 data encryption and released JDBC driver update (version 1.0.1) with

encryption support, improvements, and bug fixes.

Features

• Added the following encryption features:

• Support for querying encrypted data in Amazon S3.

• Support for encrypting Athena query results.

• A new version of the driver supports new encryption features, adds improvements, and fixes issues.

• Added the ability to add, replace, and change columns using ALTER TABLE. For more information, see

Alter Column in the Hive documentation.

• Added support for querying LZO-compressed data.

For more information, see Configuring Encryption Options (p. 41).

Improvements

• Better JDBC query performance with page-size improvements, returning 1,000 rows instead of 100.

• Added ability to cancel a query using the JDBC driver interface.

• Added ability to specify JDBC options in the JDBC connection URL. For more information, see Using

Athena with the JDBC Driver (p. 33).

• Added PROXY setting in the driver, which can now be set using ClientConfiguration in the AWS SDK for

Java.

Bug Fixes

Fixed the following bugs:

• Throttling errors would occur when multiple queries were issued using the JDBC driver interface.

• The JDBC driver would abort when projecting a decimal data type.

• The JDBC driver would return every data type as a string, regardless of how the data type

was defined in the table. For example, selecting a column defined as an INT data type using

resultSet.GetObject() would return a STRING data type instead of INT.

• The JDBC driver would verify credentials at the time a connection was made, rather than at the time a

query would run.

• Queries made through the JDBC driver would fail when a schema was specified along with the URL.

March 24, 2017

Published on 2017-03-24

Added the AWS CloudTrail SerDe, improved performance, fixed partition issues.

Features

• Added the AWS CloudTrail SerDe. For more information, see CloudTrail SerDe (p. 112). For detailed

usage examples, see the AWS Big Data Blog post, Analyze Security, Compliance, and Operational

Activity Using AWS CloudTrail and Amazon Athena.

8

Amazon Athena User Guide

Improvements

Improvements

• Improved performance when scanning a large number of partitions.

• Improved performance on MSCK Repair Table operation.

• Added ability to query Amazon S3 data stored in regions other than your primary region. Standard

inter-region data transfer rates for Amazon S3 apply in addition to standard Athena charges.

Bug Fixes

• Fixed a bug where a "table not found error" might occur if no partitions are loaded.

• Fixed a bug to avoid throwing an exception with ALTER TABLE ADD PARTITION IF NOT EXISTS

queries.

• Fixed a bug in DROP PARTITIONS.

February 20, 2017

Published on 2017-02-20

Added support for AvroSerDe and OpenCSVSerDe, US East (Ohio) region, and bulk editing columns in the

console wizard. Improved performance on large Parquet tables.

Features

• Introduced support for new SerDes:

• Avro SerDe (p. 109)

• OpenCSVSerDe for Processing CSV (p. 114)

• US East (Ohio) region (us-east-2) launch. You can now run queries in this region.

• You can now use the Add Table wizard to define table schema in bulk. Choose Catalog Manager, Add

table, and then choose Bulk add columns as you walk through the steps to define the table.

9

Amazon Athena User Guide

Features

Type name value pairs in the text box and choose Add.

10

Amazon Athena User Guide

Improvements

Improvements

• Improved performance on large Parquet tables.

11

Amazon Athena User Guide

Sign Up for AWS

Setting Up

If you've already signed up for Amazon Web Services (AWS), you can start using Amazon Athena

immediately. If you haven't signed up for AWS, or if you need assistance querying data using Athena, first

complete the tasks below:

Sign Up for AWS

When you sign up for AWS, your account is automatically signed up for all services in AWS, including

Athena. You are charged only for the services that you use. When you use Athena, you use Amazon S3 to

store your data. Athena has no AWS Free Tier pricing.

If you have an AWS account already, skip to the next task. If you don't have an AWS account, use the

following procedure to create one.

To create an AWS account

1. Open http://aws.amazon.com/, and then choose Create an AWS Account.

2. Follow the online instructions. Part of the sign-up procedure involves receiving a phone call and

entering a PIN using the phone keypad.

Note your AWS account number, because you need it for the next task.

Create an IAM User

An AWS Identity and Access Management (IAM) user is an account that you create to access services. It is

a different user than your main AWS account. As a security best practice, we recommend that you use the

IAM user's credentials to access AWS services. Create an IAM user, and then add the user to an IAM group

with administrative permissions or and grant this user administrative permissions. You can then access

AWS using a special URL and the credentials for the IAM user.

If you signed up for AWS but have not created an IAM user for yourself, you can create one using the IAM

console. If you aren't familiar with using the console, see Working with the AWS Management Console.

To create a group for administrators

1. Sign in to the IAM console at https://console.aws.amazon.com/iam/.

2. In the navigation pane, choose Groups, Create New Group.

3. For Group Name, type a name for your group, such as Administrators, and choose Next Step.

4. In the list of policies, select the check box next to the AdministratorAccess policy. You can use the

Filter menu and the Search field to filter the list of policies.

5. Choose Next Step, Create Group. Your new group is listed under Group Name.

12

Amazon Athena User Guide

To create an IAM user for yourself, add the user to the

administrators group, and create a password for the user

To create an IAM user for yourself, add the user to the

administrators group, and create a password for the

user

1. In the navigation pane, choose Users, and then Create New Users.

2. For 1, type a user name.

3. Clear the check box next to Generate an access key for each user and then Create.

4. In the list of users, select the name (not the check box) of the user you just created. You can use the

Search field to search for the user name.

5. Choose Groups, Add User to Groups.

6. Select the check box next to the administrators and choose Add to Groups.

7. Choose the Security Credentials tab. Under Sign-In Credentials, choose Manage Password.

8. Choose Assign a custom password. Then type a password in the Password and Confirm Password

fields. When you are finished, choose Apply.

9. To sign in as this new IAM user, sign out of the AWS console, then use the following URL, where

your_aws_account_id is your AWS account number without the hyphens (for example, if your AWS

account number is 1234-5678-9012, your AWS account ID is 123456789012):

https://*your_account_alias*.signin.aws.amazon.com/console/

It is also possible the sign-in link will use your account name instead of number. To verify the sign-in

link for IAM users for your account, open the IAM console and check under IAM users sign-in link on the

dashboard.

Attach Managed Policies for Using Athena

Attach Athena managed policies to the IAM account you use to access Athena. There are two managed

policies for Athena: AmazonAthenaFullAccess and AWSQuicksightAthenaAccess. These policies

grant permissions to Athena to query Amazon S3 as well as write the results of your queries to a

separate bucket on your behalf. For more information and step-by-step instructions, see Attaching

Managed Policies in the AWS Identity and Access Management User Guide. For information about policy

contents, see IAM Policies for User Access (p. 37).

Note

You may need additional permissions to access the underlying dataset in Amazon S3. If

you are not the account owner or otherwise have restricted access to a bucket, contact the

bucket owner to grant access using a resource-based bucket policy, or contact your account

administrator to grant access using an identity-based policy. For more information, see Amazon

S3 Permissions (p. 40). If the dataset or Athena query results are encrypted, you may need

additional permissions. For more information, see Configuring Encryption Options (p. 41).

13

Amazon Athena User Guide

Prerequisites

Getting Started

This tutorial walks you through using Amazon Athena to query data. You'll create a table based on

sample data stored in Amazon Simple Storage Service, query the table, and check the results of the

query.

The tutorial is using live resources, so you are charged for the queries that you run. You aren't charged

for the sample datasets that you use, but if you upload your own data files to Amazon S3, charges do

apply.

Prerequisites

If you have not already done so, sign up for an account in Setting Up (p. 12).

Step 1: Create a Database

You first need to create a database in Athena.

To create a database

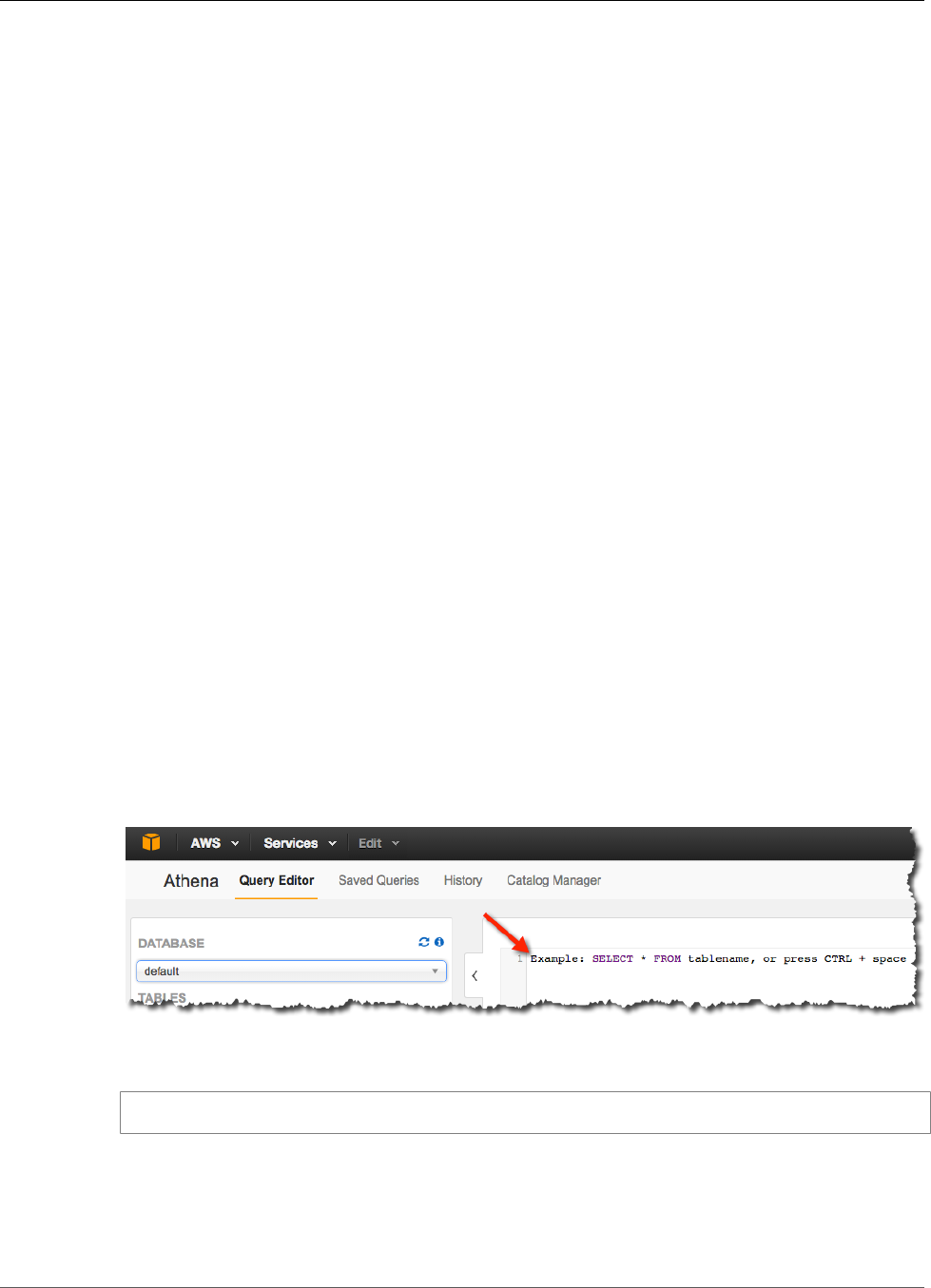

1. Open the Athena console.

2. If this is your first time visiting the Athena console, you'll go to a Getting Started page. Choose Get

Started to open the Query Editor. If it isn't your first time, the Athena Query Editor opens.

3. In the Athena Query Editor, you see a query pane with an example query. Start typing your query

anywhere in the query pane.

4. To create a database named mydatabase, enter the following CREATE DATABASE statement, and

then choose Run Query:

CREATE DATABASE mydatabase

5. Confirm that the catalog display refreshes and mydatabase appears in the DATABASE list in the

Catalog dashboard on the left side.

14

Amazon Athena User Guide

Step 2: Create a Table

Step 2: Create a Table

Now that you have a database, you're ready to create a table that's based on the sample data file. You

define columns that map to the data, specify how the data is delimited, and provide the location in

Amazon S3 for the file.

To create a table

1. Make sure that mydatabase is selected for DATABASE and then choose New Query.

2. In the query pane, enter the following CREATE TABLE statement, and then choose Run Query:

Note

You can query data in regions other than the region where you run Athena. Standard inter-

region data transfer rates for Amazon S3 apply in addition to standard Athena charges. To

reduce data transfer charges, replace myregion in s3://athena-examples-myregion/

path/to/data/ with the region identifier where you run Athena, for example, s3://

athena-examples-us-east-1/path/to/data/.

CREATE EXTERNAL TABLE IF NOT EXISTS cloudfront_logs (

`Date` DATE,

Time STRING,

Location STRING,

Bytes INT,

RequestIP STRING,

Method STRING,

Host STRING,

Uri STRING,

Status INT,

Referrer STRING,

os STRING,

Browser STRING,

BrowserVersion STRING

) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'

WITH SERDEPROPERTIES (

"input.regex" = "^(?!#)([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s

+([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s+([^ ]+)\\s+[^\(]+[\(]([^\;]+).*\%20([^

\/]+)[\/](.*)$"

) LOCATION 's3://athena-examples-myregion/cloudfront/plaintext/';

The table cloudfront_logs is created and appears in the Catalog dashboard for your database.

15

Amazon Athena User Guide

Step 3: Query Data

Step 3: Query Data

Now that you have the cloudfront_logs table created in Athena based on the data in Amazon S3, you

can run queries on the table and see the results in Athena.

To run a query

1. Choose New Query, enter the following statement anywhere in the query pane, and then choose

Run Query:

SELECT os, COUNT(*) count

FROM cloudfront_logs

WHERE date BETWEEN date '2014-07-05' AND date '2014-08-05'

GROUP BY os;

Results are returned that look like the following:

16

Amazon Athena User Guide

Step 3: Query Data

2. Optionally, you can save the results of a query to CSV by choosing the file icon on the Results pane.

You can also view the results of previous queries or queries that may take some time to complete.

Choose History then either search for your query or choose View or Download to view or download the

results of previous completed queries. This also displays the status of queries that are currently running.

Query history is retained for 45 days. For information, see Viewing Query History (p. 60).

Query results are also stored in Amazon S3 in a bucket called aws-athena-query-

results-ACCOUNTID-REGION. You can change the default location in the console and encryption options

by choosing Settings in the upper right pane. For more information, see Query Results (p. 59).

17

Amazon Athena User Guide

Integration with AWS Glue

AWS Glue is a fully managed ETL (extract, transform, and load) service that can categorize your data,

clean it, enrich it, and move it reliably between various data stores. AWS Glue crawlers automatically

infer database and table schema from your source data, storing the associated metadata in the AWS Glue

Data Catalog. When you create a table in Athena, you can choose to create it using an AWS Glue crawler.

In regions where AWS Glue is supported, Athena uses the AWS Glue Data Catalog as a central location

to store and retrieve table metadata throughout an AWS account. The Athena execution engine requires

table metadata that instructs it where to read data, how to read it, and other information necessary to

process the data. The AWS Glue Data Catalog provides a unified metadata repository across a variety

of data sources and data formats, integrating not only with Athena, but with Amazon S3, Amazon RDS,

Amazon Redshift, Amazon Redshift Spectrum, Amazon EMR, and any application compatible with the

Apache Hive metastore.

For more information about the AWS Glue Data Catalog, see Populating the AWS Glue Data Catalog

in the AWS Glue Developer Guide. For a list of regions where AWS Glue is available, see Regions and

Endpoints in the AWS General Reference.

Separate charges apply to AWS Glue. For more information, see AWS Glue Pricing and Are there separate

charges for AWS Glue? (p. 22) For more information about the benefits of using AWS Glue with

Athena, see Why should I upgrade to the AWS Glue Data Catalog? (p. 21)

Topics

• Upgrading to the AWS Glue Data Catalog Step-by-Step (p. 19)

• FAQ: Upgrading to the AWS Glue Data Catalog (p. 21)

• Best Practices When Using Athena with AWS Glue (p. 23)

18

Amazon Athena User Guide

Upgrading to the AWS Glue Data Catalog Step-by-Step

Upgrading to the AWS Glue Data Catalog Step-by-

Step

Amazon Athena manages its own data catalog until the time that AWS Glue releases in the Athena

region. At that time, if you previously created databases and tables using Athena or Amazon Redshift

Spectrum, you can choose to upgrade Athena to the AWS Glue Data Catalog. If you are new to Athena,

you don't need to make any changes; databases and tables are available to Athena using the AWS Glue

Data Catalog and vice versa. For more information about the benefits of using the AWS Glue Data

Catalog, see FAQ: Upgrading to the AWS Glue Data Catalog (p. 21). For a list of regions where AWS

Glue is available, see Regions and Endpoints in the AWS General Reference.

Until you upgrade, the Athena-managed data catalog continues to store your table and database

metadata, and you see the option to upgrade at the top of the console. The metadata in the Athena-

managed catalog isn't available in the AWS Glue Data Catalog or vice versa. While the catalogs exist side-

by-side, you aren't able to create tables or databases with the same names, and the creation process in

either AWS Glue or Athena fails in this case.

We created a wizard in the Athena console to walk you through the steps of upgrading to the AWS

Glue console. The upgrade takes just a few minutes, and you can pick up where you left off. For more

information about each upgrade step, see the topics in this section. For more information about working

with data and tables in the AWS Glue Data Catalog, see the guidelines in Best Practices When Using

Athena with AWS Glue (p. 23).

Step 1 - Allow a User to Perform the Upgrade

By default, the action that allows a user to perform the upgrade is not allowed in any policy, including

any managed policies. Because the AWS Glue Data Catalog is shared throughout an account, this extra

failsafe prevents someone from accidentally migrating the catalog.

Before the upgrade can be performed, you need to attach a customer-managed IAM policy, with a policy

statement that allows the upgrade action, to the user who performs the migration.

The following is an example policy statement.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"glue:ImportCatalogToGlue "

],

"Resource": [ "*" ]

}

]

}

Step 2 - Update Customer-Managed/Inline Policies

Associated with Athena Users

If you have customer-managed or inline IAM policies associated with Athena users, you need to update

the policy or policies to allow actions that AWS Glue requires. If you use the managed policy, they are

automatically updated. The AWS Glue policy actions to allow are listed in the example policy below. For

the full policy statement, see IAM Policies for User Access (p. 37).

19

Amazon Athena User Guide

Step 3 - Choose Upgrade in the Athena Console

{

"Effect":"Allow",

"Action":[

"glue:CreateDatabase",

"glue:DeleteDatabase",

"glue:GetDatabase",

"glue:GetDatabases",

"glue:UpdateDatabase",

"glue:CreateTable",

"glue:DeleteTable",

"glue:BatchDeleteTable",

"glue:UpdateTable",

"glue:GetTable",

"glue:GetTables",

"glue:BatchCreatePartition",

"glue:CreatePartition",

"glue:DeletePartition",

"glue:BatchDeletePartition",

"glue:UpdatePartition",

"glue:GetPartition",

"glue:GetPartitions",

"glue:BatchGetPartition"

],

"Resource":[

"*"

]

}

Step 3 - Choose Upgrade in the Athena Console

After you make the required IAM policy updates, choose Upgrade in the Athena console. Athena moves

your metadata to the AWS Glue Data Catalog. The upgrade takes only a few minutes. After you upgrade,

the Athena console has a link to open the AWS Glue Catalog Manager from within Athena.

When you create a table using the console, you now have the option to create a table using an AWS Glue

crawler. For more information, see Using AWS Glue Crawlers (p. 24).

20

Amazon Athena User Guide

FAQ: Upgrading to the AWS Glue Data Catalog

FAQ: Upgrading to the AWS Glue Data Catalog

If you created databases and tables using Athena in a region before AWS Glue was available in that

region, metadata is stored in an Athena-managed data catalog, which only Athena and Amazon Redshift

Spectrum can access. To use AWS Glue features together with Athena and Redshift Spectrum, you must

upgrade to the AWS Glue Data Catalog. Athena can only be used together with the AWS Glue Data

Catalog in regions where AWS Glue is available. For a list of regions, see Regions and Endpoints in the

AWS General Reference.

Why should I upgrade to the AWS Glue Data Catalog?

AWS Glue is a completely-managed extract, transform, and load (ETL) service. It has three main

components:

• An AWS Glue crawler can automatically scan your data sources, identify data formats, and infer

schema.

• A fully managed ETL service allows you to transform and move data to various destinations.

• The AWS Glue Data Catalog stores metadata information about databases and tables, pointing to a

data store in Amazon S3 or a JDBC-compliant data store.

For more information, see AWS Glue Concepts.

Upgrading to the AWS Glue Data Catalog has the following benefits.

Unified metadata repository

The AWS Glue Data Catalog provides a unified metadata repository across a variety of data sources

and data formats. It provides out-of-the-box integration with Amazon Simple Storage Service (Amazon

S3), Amazon Relational Database Service (Amazon RDS), Amazon Redshift, Amazon Redshift Spectrum,

Athena, Amazon EMR, and any application compatible with the Apache Hive metastore. You can create

your table definitions one time and query across engines.

21

Amazon Athena User Guide

Are there separate charges for AWS Glue?

For more information, see Populating the AWS Glue Data Catalog.

Automatic schema and partition recognition

AWS Glue crawlers automatically crawl your data sources, identify data formats, and suggest schema and

transformations. Crawlers can help automate table creation and automatic loading of partitions that you

can query using Athena, Amazon EMR, and Redshift Spectrum. You can also create tables and partitions

directly using the AWS Glue API, SDKs, and the AWS CLI.

For more information, see Cataloging Tables with a Crawler.

Easy-to-build pipelines

The AWS Glue ETL engine generates Python code that is entirely customizable, reusable, and portable.

You can edit the code using your favorite IDE or notebook and share it with others using GitHub. After

your ETL job is ready, you can schedule it to run on the fully managed, scale-out Spark infrastructure of

AWS Glue. AWS Glue handles provisioning, configuration, and scaling of the resources required to run

your ETL jobs, allowing you to tightly integrate ETL with your workflow.

For more information, see Authoring AWS Glue Jobs in the AWS Glue Developer Guide.

Are there separate charges for AWS Glue?

Yes. With AWS Glue, you pay a monthly rate for storing and accessing the metadata stored in the AWS

Glue Data Catalog, an hourly rate billed per second for AWS Glue ETL jobs and crawler runtime, and an

hourly rate billed per second for each provisioned development endpoint. The AWS Glue Data Catalog

allows you to store up to a million objects at no charge. If you store more than a million objects, you

are charged USD$1 for each 100,000 objects over a million. An object in the AWS Glue Data Catalog is a

table, a partition, or a database. For more information, see AWS Glue Pricing.

Upgrade process FAQ

• Who can perform the upgrade? (p. 22)

• My users use a managed policy with Athena and Redshift Spectrum. What steps do I need to take to

upgrade? (p. 22)

• What happens if I don’t upgrade? (p. 23)

• Why do I need to add AWS Glue policies to Athena users? (p. 23)

• What happens if I don’t allow AWS Glue policies for Athena users? (p. 23)

• Is there risk of data loss during the upgrade? (p. 23)

• Is my data also moved during this upgrade? (p. 23)

Who can perform the upgrade?

You need to attach a customer-managed IAM policy with a policy statement that allows the upgrade

action to the user who will perform the migration. This extra check prevents someone from accidentally

migrating the catalog for the entire account. For more information, see Step 1 - Allow a User to Perform

the Upgrade (p. 19).

My users use a managed policy with Athena and Redshift

Spectrum. What steps do I need to take to upgrade?

The Athena managed policy has been automatically updated with new policy actions that allow Athena

users to access AWS Glue. However, you still must explicitly allow the upgrade action for the user who

performs the upgrade. To prevent accidental upgrade, the managed policy does not allow this action.

22

Amazon Athena User Guide

Best Practices When Using Athena with AWS Glue

What happens if I don’t upgrade?

If you don’t upgrade, you are not able to use AWS Glue features together with the databases and tables

you create in Athena or vice versa. You can use these services independently. During this time, Athena

and AWS Glue both prevent you from creating databases and tables that have the same names in the

other data catalog. This prevents name collisions when you do upgrade.

Why do I need to add AWS Glue policies to Athena users?

Before you upgrade, Athena manages the data catalog, so Athena actions must be allowed for your users

to perform queries. After you upgrade to the AWS Glue Data Catalog, Athena actions no longer apply to

accessing the AWS Glue Data Catalog, so AWS Glue actions must be allowed for your users. Remember,

the managed policy for Athena has already been updated to allow the required AWS Glue actions, so no

action is required if you use the managed policy.

What happens if I don’t allow AWS Glue policies for Athena

users?

If you upgrade to the AWS Glue Data Catalog and don't update a user's customer-managed or inline IAM

policies, Athena queries fail because the user won't be allowed to perform actions in AWS Glue. For the

specific actions to allow, see Step 2 - Update Customer-Managed/Inline Policies Associated with Athena

Users (p. 19).

Is there risk of data loss during the upgrade?

No.

Is my data also moved during this upgrade?

No. The migration only affects metadata.

Best Practices When Using Athena with AWS Glue

When using Athena with the AWS Glue Data Catalog, you can use AWS Glue to create databases and

tables (schema) to be queried in Athena, or you can use Athena to create schema and then use them in

AWS Glue and related services. This topic provides considerations and best practices when using either

method.

Under the hood, Athena uses Presto to execute DML statements and Hive to execute the DDL statements

that create and modify schema. With these technologies, there are a couple conventions to follow so

that Athena and AWS Glue work well together.

In this topic

• Database, Table, and Column Names (p. 24)

• Using AWS Glue Crawlers (p. 24)

• Scheduling a Crawler to Keep the AWS Glue Data Catalog and Amazon S3 in Sync (p. 24)

• Using Multiple Data Sources with Crawlers (p. 25)

• Syncing Partition Schema to Avoid "HIVE_PARTITION_SCHEMA_MISMATCH" (p. 27)

• Updating Table Metadata (p. 27)

23

Amazon Athena User Guide

Database, Table, and Column Names

• Working with CSV Files (p. 28)

• CSV Data Enclosed in Quotes (p. 28)

• CSV Files with Headers (p. 30)

• Using AWS Glue Jobs for ETL with Athena (p. 30)

• Creating Tables Using Athena for AWS Glue ETL Jobs (p. 30)

• Using ETL Jobs to Optimize Query Performance (p. 31)

• Converting SMALLINT and TINYINT Datatypes to INT When Converting to ORC (p. 32)

• Changing Date Data Types to String for Parquet ETL Transformation (p. 32)

• Automating AWS Glue Jobs for ETL (p. 32)

Database, Table, and Column Names

When you create schema in AWS Glue to query in Athena, consider the following:

• A database name cannot be longer than 252 characters.

• A table name cannot be longer than 255 characters.

• A column name cannot be longer than 128 characters.

• The only acceptable characters for database names, table names, and column names are lowercase

letters, numbers, and the underscore character.

You can use the AWS Glue Catalog Manager to rename columns, but at this time table names and

database names cannot be changed using the AWS Glue console. To correct database names, you need to

create a new database and copy tables to it (in other words, copy the metadata to a new entity). You can

follow a similar process for tables. You can use the AWS Glue SDK or AWS CLI to do this.

Using AWS Glue Crawlers

AWS Glue crawlers help discover and register the schema for datasets in the AWS Glue Data Catalog.

The crawlers go through your data, and inspect portions of it to determine the schema. In addition, the

crawler can detect and register partitions. For more information, see Cataloging Data with a Crawler in

the AWS Glue Developer Guide.

Scheduling a Crawler to Keep the AWS Glue Data Catalog and

Amazon S3 in Sync

AWS Glue crawlers can be set up to run on a schedule or on demand. For more information, see Time-

Based Schedules for Jobs and Crawlers in the AWS Glue Developer Guide.

If you have data that arrives for a partitioned table at a fixed time, you can set up an AWS Glue crawler

to run on schedule to detect and update table partitions. This can eliminate the need to run a potentially

long and expensive MSCK REPAIR command or manually execute an ALTER TABLE ADD PARTITION

command. For more information, see Table Partitions in the AWS Glue Developer Guide.

24

Amazon Athena User Guide

Using AWS Glue Crawlers

Using Multiple Data Sources with Crawlers

When an AWS Glue crawler scans Amazon S3 and detects multiple directories, it uses a heuristic to

determine where the root for a table is in the directory structure, and which directories are partitions

for the table. In some cases, where the schema detected in two or more directories is similar, the crawler

may treat them as partitions instead of separate tables. One way to help the crawler discover individual

tables is to add each table's root directory as a data store for the crawler.

The following partitions in Amazon S3 are an example:

s3://bucket01/folder1/table1/partition1/file.txt

s3://bucket01/folder1/table1/partition2/file.txt

s3://bucket01/folder1/table1/partition3/file.txt

s3://bucket01/folder1/table2/partition4/file.txt

s3://bucket01/folder1/table2/partition5/file.txt

If the schema for table1 and table2 are similar, and a single data source is set to s3://bucket01/

folder1/ in AWS Glue, the crawler may create a single table with two partition columns: one partition

column that contains table1 and table2, and a second partition column that contains partition1

through partition5.

To have the AWS Glue crawler create two separate tables as intended, use the AWS Glue console to set

the crawler to have two data sources, s3://bucket01/folder1/table1/ and s3://bucket01/

folder1/table2, as shown in the following procedure.

To add another data store to an existing crawler in AWS Glue

1. In the AWS Glue console, choose Crawlers, select your crawler, and then choose Action, Edit crawler.

25

Amazon Athena User Guide

Using AWS Glue Crawlers

2. Under Add information about your crawler, choose additional settings as appropriate, and then

choose Next.

3. Under Add a data store, change Include path to the table-level directory. For instance, given the

example above, you would change it from s3://bucket01/folder1 to s3://bucket01/folder1/table1/.

Choose Next.

4. For Add another data store, choose Yes, Next.

26

Amazon Athena User Guide

Using AWS Glue Crawlers

5. For Include path, enter your other table-level directory (for example, s3://bucket01/folder1/table2/)

and choose Next.

a. Repeat steps 3-5 for any additional table-level directories, and finish the crawler configuration.

The new values for Include locations appear under data stores

Syncing Partition Schema to Avoid

"HIVE_PARTITION_SCHEMA_MISMATCH"

For each table within the AWS Glue Data Catalog that has partition columns, the schema is stored

at the table level and for each individual partition within the table. The schema for partitions are

populated by an AWS Glue crawler based on the sample of data that it reads within the partition. For

more information, see Using Multiple Data Sources with Crawlers (p. 25).

When Athena runs a query, it validates the schema of the table and the schema of any partitions

necessary for the query. The validation compares the column data types in order and makes sure

that they match for the columns that overlap. This prevents unexpected operations such as adding